Communications of the ACM

Judge Weighs In on Chatbot’s Turing Test Performance

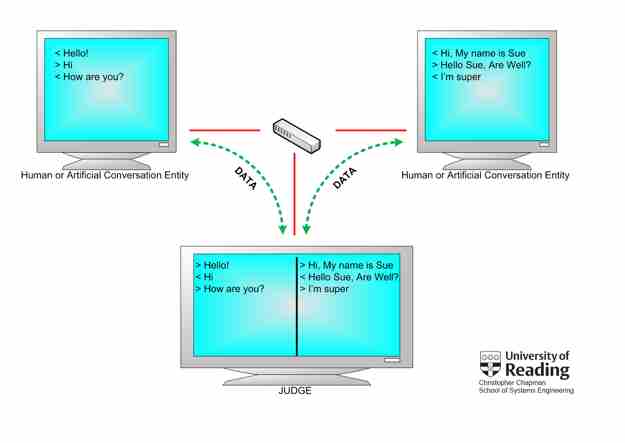

Judges text-chatted simultaneously with a real person and a chatbot for five minutes before they had to say which was which.

Credit: Manchester Metropolitan University

It is Saturday, June 7, 2014, 60 years to the day from the tragic death of computer pioneer Alan Turing. I am at the Royal Society in London, just a few miles from the place where Turing was born. As one of 30 judges, I am about to take part in an ‘official’ Turing Test organized by the University of Reading. It will be up to us, the judges, to try to distinguish a chatting human from a chatting computer or chatbot.

As a judge, I participate in five text-only chat sessions, each putting me simultaneously in conversation with a human and a chatbot. My interactions with one of them appear on the left side of my screen, the other on the right side. After each five-minute session, I have to decide which of them is a person and which is a machine.

One of my chats went as follows. As a judge, I start as Local; the human or chatbot answers as Remote.

Let’s start in a very ordinary way, I thought:

Local: Good morning! Nice you want to participate in a Turing Test.

The answer appears quickly:

Remote: But I wouldn't say that any morning could be good.

A strange sentence, but okay, it could still have been written by a human. Chatbots combine pieces of text collected from earlier chats, adding some variations. They have trouble giving consistent answers to questions about family, friends, biographical memories, personal opinions, etc.

I decide to ask a personal opinion:

Local: What do you think of the Turing Test?

Remote: There are some people who would not pass the Turing test themselves. Why must they inflict it on machines?

This is a typical answer I have received in several variants from chatbots during previous sessions.

Let’s make it more personal and use a linguistic expression:

Local: Is the glass half-full or half-empty for you today?

Remote: That's not something I would tell just anyone!

I am increasingly convinced I am talking with a chatbot. I will ask the Remote for an opinion about a political topic, also notoriously difficult for chatbots:

Local: What do you think of the situation in Ukraine?

Remote: Difficult question. I will have to work on that and get back to you tomorrow.

Got you! No human would answer this way in a Turing Test. By the way, one of the rules of the game is that Remote-humans are asked to be themselves, not to play a fool or a chatbot.

Local: You can always e-mail me.

Remote: I'm not allowed to send e-mails all by myself, although I am quite capable of doing so. My makers are concerned I might be duped into ordering magazine subscriptions. If you give me your e-mail address, I'll ask if I can write to you.

The answer is so long and appears on the screen so rapidly that it can only have been written by a computer. Also, no human would talk about ‘my makers,’ but a chatbot has no idea what it is divulging.

I turn the conversation to the personal life of my chat partner. For a chatbot, it is very hard to build a consistent life story (as it has none).

Local: What is your favorite memory?

Remote: I favor complete impartiality.

The screen turns blank. Time has run out. Piece of cake; I have been talking to a chatbot.

When Turing introduced the idea for what later came to be known as a Turing Test in 1950, he did not explicitly formulate all the rules needed for a practical test. He proposed we might describe a machine as actually "thinking" if 30% of the judges were unable to distinguish that machine from a human after a five-minute remote chat; he never mentioned how many judges would need to take part, one of many controversies about interpretations of the Turing Test.

At the end of the day, University of Reading professor Kevin Warwick announces the results. One of the five chatbots, named Eugene, has fooled 10 of the 30 judges, which exceeds Turing’s 30% criterion; Eugene has passed the Turing Test.

Chatbot Eugene plays the role of fictional character Eugene Goostman, a 13-year-old Ukrainian boy born in Odessa. Compared to the other participating chatbots, the developers of Eugene put greater effort into giving their chatbot a personality and a life history.

Almost 65 years after Alan Turing proposed a test to decide the question "can machines think?" a chatbot has finally passed the Turing Test. Yet, there is no big party at the Royal Society. I think most people know Eugene passing the Turing Test is nothing like supercomputer Deep Blue beating Garry Kasparov at chess, or supercomputer Watson beating Ken Jennings and Brad Rutter on the TV show Jeopardy!

The Turing Test has become an icon of popular culture, but it is too vague, too controversial, and too outdated to be considered a true scientific test. What are the exact rules of the test? What does passing the test tell us about artificial intelligence? Should a chatbot be allowed to pretend to be a 13-year-old, instead of an adult? Isn’t five minutes for a chat far too short?

I don’t think Turing himself would have been very pleased with the way Eugene passed his test. Turing had a vision of teaching computers the same way we teach our children, and after some period of time, the computer would start to learn by itself. Programmers of chatbots, however, try to fool people with simple tricks.

The Turing Test is too much of an all-or-nothing test. It does not measure our progress in artificial intelligence. It is too much of a competition of humans against machines, where our current reality is one of humans cooperating with machines. Some things are done better by machines, others by humans. We should look for the best form of cooperation between the two.

The Turing Test is based too much on simulating human intelligence. Yet in the same way a Boeing 747 flies differently than a bird, computers are intelligent differently than people, and there is nothing wrong with that. IBM’s supercomputer Watson and Google’s Self-Driving Car show the enormous progress artificial intelligence has made, yet neither can pass the Turing Test.

The Turing Test is outdated. Artificial intelligence has so much more to offer than chatbots fooling people with tricks. Ultimately, the Turing Test is more for fun than for science.

Bennie Mols is a science and technology writer based in Amsterdam, the Netherlands. He is also the author of Turing's Tango (in Dutch, 2012), a book about artificial intelligence, Alan Turing, and the Turing Test.
