Communications of the ACM

What Does 'Big Data' Mean? (Part 2)

In the previous blog post, I talked about four possible meanings of "big data," namely

Big volume – small analytics

Big volume – big analytics

Big velocity

Big variety

and discussed the first use case. In this posting, I continue with a discussion of the second use case. I begin with an example of what I mean by big analytics on big volumes of data. It should be noted that I am using a somewhat contrived example to make a point, rather than burrowing into arcane details of actual applications.

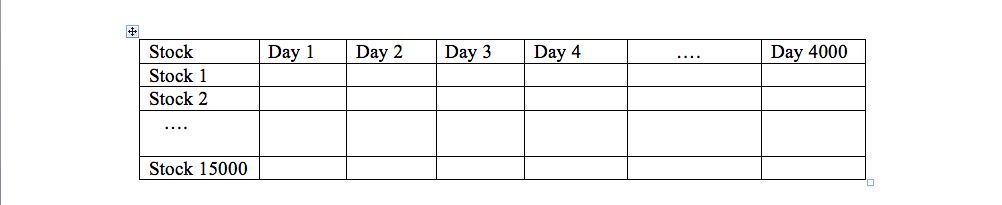

Consider the specification of an electronic trading system on Wall Street. The data available is, say, the closing stock price for every publicly traded U.S. stock for the last 20 years. This is the Stock array noted in Figure 1. There seem to be about 15,000 U.S. stocks and 4,000 trading days, so this array has 60M cells. If we move to hourly data, the array expands by an order of magnitude, while including non-U.S. stocks would further increase the volume by a factor of 4. Tick-level data or the inclusion of options will send data volume through the roof (i.e., this array quickly becomes "big data").

A Data Set on Stock Prices

Figure 1
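The sizing arithmetic for the Stock array can be sketched in a few lines. The stock and day counts come from the text; reading "an order of magnitude" as exactly 10x and storing each price as an 8-byte float are my assumptions for illustration only.

```python
# Back-of-the-envelope sizing for the Stock array.
US_STOCKS = 15_000      # approximate count from the text
TRADING_DAYS = 4_000    # approximate count from the text
HOURLY_FACTOR = 10      # assumption: "an order of magnitude" read as 10x
NON_US_FACTOR = 4       # from the text: non-U.S. stocks multiply volume by 4
BYTES_PER_CELL = 8      # assumption: one 64-bit float per price

daily_cells = US_STOCKS * TRADING_DAYS
hourly_cells = daily_cells * HOURLY_FACTOR
global_hourly_cells = hourly_cells * NON_US_FACTOR

for label, cells in [
    ("daily, U.S. only", daily_cells),
    ("hourly, U.S. only", hourly_cells),
    ("hourly, worldwide", global_hourly_cells),
]:
    gigabytes = cells * BYTES_PER_CELL / 1e9
    print(f"{label}: {cells:,} cells, about {gigabytes:.2f} GB")
```

Even under these modest assumptions the array grows by a factor of 40 before tick-level data or options are considered.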

An analyst would naturally start his or her exploration by ascertaining whether pairs of interesting stocks, say Oracle and IBM, were correlated. One possible calculation is the covariance of the two time series, shown in Figure 2.

(1/3999) * Sum over i of {Oracle[i] – avg(Oracle)} * {IBM[i] – avg(IBM)}

Covariance Calculation

Figure 2

Put differently, subtract the mean from each time series, take the dot product of the two resulting vectors (multiply them element by element and sum), and divide by the length of the vectors minus 1. Other possible metrics of interest would be the correlation coefficient and the difference in variance of the two time series. Now, suppose we want to compute an all-pairs covariance. In other words, find the covariance between all pairs of 15,000 stocks, yielding a desired 15,000 x 15,000 result. Ignoring the constant factor and the subtraction of the averages, we get the following calculation:

Stock * Transpose (Stock)

Hence, covariance is a matrix calculation on the Stock matrix of Figure 1 that is solved using matrix multiply and matrix transpose. Most complex analytics (linear regression, data clustering, feature detection, machine learning, etc.) have the same characteristic; they are matrix calculations and are expressed as collections of linear algebra operations.
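As a minimal sketch of this equivalence, the following pure-Python code computes the covariance of Figure 2 for one pair of series and then the all-pairs result as a mean-centered Stock matrix times its transpose, divided by the number of days minus 1. The helper names and the 3 x 4 toy data are hypothetical; a real system would use an optimized linear algebra library rather than nested lists.

```python
def mean(xs):
    return sum(xs) / len(xs)

def covariance(x, y):
    """Sample covariance of two equal-length series (the Figure 2 formula)."""
    mx, my = mean(x), mean(y)
    return sum((a - mx) * (b - my) for a, b in zip(x, y)) / (len(x) - 1)

def center_rows(m):
    """Subtract each row's mean from every element of that row."""
    centered = []
    for row in m:
        mu = mean(row)
        centered.append([v - mu for v in row])
    return centered

def transpose(m):
    return [list(col) for col in zip(*m)]

def matmul(a, b):
    """Plain matrix multiply: rows of a dotted with columns of b."""
    cols = transpose(b)
    return [[sum(x * y for x, y in zip(row, col)) for col in cols] for row in a]

def all_pairs_covariance(stock):
    """Centered Stock times its transpose, scaled by 1 / (days - 1)."""
    centered = center_rows(stock)
    n_days = len(stock[0])
    product = matmul(centered, transpose(centered))
    return [[v / (n_days - 1) for v in row] for row in product]

# Toy data: 3 hypothetical stocks (rows) by 4 trading days (columns).
stock = [
    [10.0, 11.0, 12.0, 13.0],
    [20.0, 19.0, 18.0, 17.0],
    [5.0, 7.0, 6.0, 8.0],
]
cov = all_pairs_covariance(stock)
```

Each entry `cov[i][j]` agrees with `covariance(stock[i], stock[j])`, which is the sense in which all-pairs covariance reduces to matrix multiply and transpose.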

As near as I can tell, the requirements for complex analytics on big data are to mix data management operations (e.g., subset the stocks to those with more than $1B market capitalization, or those in the technology sector) and linear algebra.

I can see the following possible approaches to big analytics on big data:

Option 1: Use a statistics package such as R, S, SAS, SPSS, etc. Such a package will perform the linear algebra, but data management features are weak-to-non-existent. In addition, some packages won’t scale to multiple nodes or to data that does not fit in main memory. Hence, this option solves a piece of the problem, and may or may not scale.

Option 2: Use an RDBMS. Obviously, SQL is rich in data management but contains no linear algebra operations. However, some linear algebra calculations can be simulated in SQL. For example, consider a relational simulation of the Stock array as the following table:

Array-sim (price, stock-id, day#)

The matrix multiplication of Stock times its transpose can be simulated as:

Select A1.stock-id, A2.stock-id, sum (A1.price * A2.price) As covar

From Array-sim A1, Array-sim A2

Where A1.day# = A2.day#

Group by A1.stock-id, A2.stock-id

Linear Algebra in SQL

Figure 3
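To make the simulation concrete, here is a minimal runnable sketch using SQLite through Python's standard `sqlite3` module. SQLite is only a convenient stand-in for an RDBMS, and the identifiers `array_sim`, `stock_id`, and `day_no` are my renamings of the names in Figure 3 so that they are legal SQL; the 2 x 3 data is made up.

```python
import sqlite3

# Toy Stock array: 2 hypothetical stocks (rows) by 3 days (columns).
stock = [
    [1.0, 2.0, 3.0],
    [4.0, 5.0, 6.0],
]

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE array_sim (price REAL, stock_id INTEGER, day_no INTEGER)"
)
# One relational row per array cell.
conn.executemany(
    "INSERT INTO array_sim VALUES (?, ?, ?)",
    [(price, s, d) for s, row in enumerate(stock) for d, price in enumerate(row)],
)

# Stock times Transpose(Stock) as a self-join on the day, grouped by stock pair.
rows = conn.execute(
    """
    SELECT a1.stock_id, a2.stock_id, SUM(a1.price * a2.price) AS covar
    FROM array_sim AS a1, array_sim AS a2
    WHERE a1.day_no = a2.day_no
    GROUP BY a1.stock_id, a2.stock_id
    ORDER BY a1.stock_id, a2.stock_id
    """
).fetchall()
conn.close()

sql_result = {(i, j): value for i, j, value in rows}

# The same product computed directly on the array, for comparison.
direct = {
    (i, j): sum(x * y for x, y in zip(stock[i], stock[j]))
    for i in range(len(stock))
    for j in range(len(stock))
}
```

The two dictionaries hold the same four entries. Note that the join materializes one row per (stock, stock, day) triple before aggregating, which is the source of the cost for a dense matrix.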

For sparse matrices, this simulation may be quite efficient. However, Stock is a dense matrix, and the above computation will be very pokey. Two problems emerge. First, the self-join with a group-by on two columns will be a complex query, and the natural clustering found in native arrays will not be present in Array-sim. Second, there will be 15,000 * 15,000 * 4,000 (about 9 * 10^11) pairs of floating point multiply and add calculations. A colleague of mine reported a factor of 10^5 difference in speed between expressing a matrix multiply in Python (the slowest) and in vectorized C (the fastest) when all operands are in main memory. Performing significant floating point operations in SQL is likely to be at the Python end of the scale. Hence, relational simulations of linear algebra operations are likely to result in poor I/O and CPU performance.

In addition, the simulation is not easy to figure out. Hence maintenance of the program containing the code in Figure 3 will likely be expensive. Moreover, SQL simulations become problematic for linear algebra operations that entail loops or convergence tests, since they require indefinite iteration. These require writing stored procedures or application logic.
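The iteration problem can be illustrated with a small sketch. Power iteration is my choice of example, not one named above: each matrix-vector product is a SQL join and group-by, but the loop and the convergence test have to live in application logic (here Python driving SQLite). The table names `m` and `v`, the function name, and the tolerance are all hypothetical.

```python
import math
import sqlite3

def dominant_eigenvalue(matrix, tol=1e-10, max_iter=1000):
    """Power iteration: SQL does each matrix-vector product, Python the loop."""
    n = len(matrix)
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE m (i INTEGER, j INTEGER, val REAL)")
    conn.execute("CREATE TABLE v (j INTEGER, val REAL)")
    conn.executemany(
        "INSERT INTO m VALUES (?, ?, ?)",
        [(i, j, x) for i, row in enumerate(matrix) for j, x in enumerate(row)],
    )
    # Start from a unit-length vector with equal components.
    start = 1.0 / math.sqrt(n)
    conn.executemany("INSERT INTO v VALUES (?, ?)", [(j, start) for j in range(n)])

    estimate = 0.0
    for iteration in range(1, max_iter + 1):
        # One step expressed in SQL: w = M * v.
        w = conn.execute(
            "SELECT m.i, SUM(m.val * v.val) FROM m JOIN v ON m.j = v.j "
            "GROUP BY m.i ORDER BY m.i"
        ).fetchall()
        norm = math.sqrt(sum(x * x for _, x in w))
        if norm == 0.0:
            conn.close()
            raise ValueError("matrix maps the current vector to zero")
        # The convergence test cannot be written in the query itself.
        converged = abs(norm - estimate) < tol
        estimate = norm
        # Copy the normalized result back into the vector table.
        conn.execute("DELETE FROM v")
        conn.executemany(
            "INSERT INTO v VALUES (?, ?)", [(i, x / norm) for i, x in w]
        )
        if converged:
            break
    conn.close()
    return estimate, iteration

# Small symmetric example whose dominant eigenvalue is (5 + sqrt(5)) / 2.
value, steps = dominant_eigenvalue([[2.0, 1.0], [1.0, 3.0]])
```

The norm of `M * v` is used as the eigenvalue estimate, which assumes the dominant eigenvalue is positive, as it is for a covariance matrix. Every pass through the loop is a separate round trip to the DBMS, which is part of what makes this style awkward.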

Option 3: Use Options 1 and 2 together. In this case we use each system for what it is good for. However, the user must learn two systems and copy the world back and forth. Although this option is inconvenient, it is very widely used in today’s marketplace.
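The back-and-forth copying might look roughly like the following sketch, in which SQLite plays the RDBMS and plain Python stands in for the statistics package. The schema, tickers, market capitalizations, and prices are all invented for illustration.

```python
import sqlite3

def covariance(x, y):
    """Sample covariance, standing in for a call into a statistics package."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    return sum((a - mx) * (b - my) for a, b in zip(x, y)) / (len(x) - 1)

conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE stocks (stock_id INTEGER PRIMARY KEY, ticker TEXT, market_cap REAL);
    CREATE TABLE prices (stock_id INTEGER, day_no INTEGER, price REAL);
    CREATE TABLE covariances (stock_a INTEGER, stock_b INTEGER, covar REAL);
    """
)
conn.executemany(
    "INSERT INTO stocks VALUES (?, ?, ?)",
    [(1, "AAA", 5e9), (2, "BBB", 2e9), (3, "CCC", 4e8)],  # hypothetical tickers
)
toy_prices = {
    1: [10.0, 11.0, 12.0, 13.0],
    2: [20.0, 19.0, 18.0, 17.0],
    3: [5.0, 7.0, 6.0, 8.0],
}
conn.executemany(
    "INSERT INTO prices VALUES (?, ?, ?)",
    [(s, d, p) for s, row in toy_prices.items() for d, p in enumerate(row)],
)

# Step 1 (DBMS): data management, keeping stocks above $1B market cap.
# Step 2 (copy out): pull the qualifying prices into application memory.
series = {}
for stock_id, price in conn.execute(
    "SELECT p.stock_id, p.price FROM prices AS p "
    "JOIN stocks AS s ON s.stock_id = p.stock_id "
    "WHERE s.market_cap > ? ORDER BY p.stock_id, p.day_no",
    (1e9,),
):
    series.setdefault(stock_id, []).append(price)

# Step 3 (statistics side): the analytics run outside the DBMS.
results = [(a, b, covariance(series[a], series[b])) for a in series for b in series]

# Step 4 (copy back): store the answer where SQL can query it again.
conn.executemany("INSERT INTO covariances VALUES (?, ?, ?)", results)
stored = conn.execute("SELECT COUNT(*) FROM covariances").fetchone()[0]
pair_covar = conn.execute(
    "SELECT covar FROM covariances WHERE stock_a = 1 AND stock_b = 2"
).fetchone()[0]
conn.close()
```

At toy scale the two copies are free. At the scale of the full Stock array, steps 2 and 4 move the whole working set across a system boundary.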

Option 4: Use extended RDBMS features. Because of the obvious performance problems with Option 2, several vendors have announced user-defined functions inside the data manager that either implement certain matrix operations or call R to perform the operations. In other words, vendors are starting to make Option 3 less painful. However, one should carefully note that some vendors offer no intra-command parallelism for matrix operations. In other words, the linear algebra will not scale to multiple nodes. Others implement only a select few computations. Hence, a prospective user would be wise to benchmark the performance, scalability, and completeness of any vendor package under consideration.
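The general idea of a user-defined function inside the data manager can be sketched with SQLite's user-defined aggregates, registered through Python's `sqlite3.Connection.create_aggregate`. This is only an analogy: it is not any vendor's feature, the aggregate name `covar` and the table layout are hypothetical, and the running-sums formula is the simple textbook one rather than a numerically robust one.

```python
import sqlite3

class Covariance:
    """User-defined aggregate: sample covariance of (x, y) pairs."""

    def __init__(self):
        self.n = 0
        self.sum_x = 0.0
        self.sum_y = 0.0
        self.sum_xy = 0.0

    def step(self, x, y):
        # Called once per joined row; only running sums are kept.
        self.n += 1
        self.sum_x += x
        self.sum_y += y
        self.sum_xy += x * y

    def finalize(self):
        if self.n < 2:
            return None  # covariance is undefined for fewer than two points
        centered = self.sum_xy - self.sum_x * self.sum_y / self.n
        return centered / (self.n - 1)

conn = sqlite3.connect(":memory:")
conn.create_aggregate("covar", 2, Covariance)
conn.execute(
    "CREATE TABLE array_sim (price REAL, stock_id INTEGER, day_no INTEGER)"
)
toy = [[10.0, 11.0, 12.0, 13.0], [20.0, 19.0, 18.0, 17.0]]  # made-up prices
conn.executemany(
    "INSERT INTO array_sim VALUES (?, ?, ?)",
    [(p, s, d) for s, row in enumerate(toy) for d, p in enumerate(row)],
)

rows = conn.execute(
    """
    SELECT a1.stock_id, a2.stock_id, covar(a1.price, a2.price)
    FROM array_sim AS a1 JOIN array_sim AS a2 ON a1.day_no = a2.day_no
    GROUP BY a1.stock_id, a2.stock_id
    ORDER BY a1.stock_id, a2.stock_id
    """
).fetchall()
conn.close()

udf_result = {(i, j): value for i, j, value in rows}
```

The query now reads more naturally and includes the mean subtraction, but the aggregate still runs row at a time in a single process, so the questions about parallelism and completeness raised above apply equally here.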

Option 5: Use an array DBMS, such as Rasdaman or SciDB. These systems support array operations natively. Hence, data management and analytics are done in a single system on data in the same data model. Again, one should carefully check the performance, scalability, and completeness of the analytics in any system under consideration. This option is certainly the most natural, and may well be the most performant, since no simulation is involved. However, users must learn a new query language with somewhat different semantics from SQL.

I draw four conclusions from this discussion. First, I think complex analytics will increase dramatically in importance as data mining and other complex tasks increase in importance. This shift will be driven by complex analytics problems with dramatic economic value such as recommendation engines, ad placement, and targeted customer segmentation for various purposes. Hence, the world will shift away from the simple analytics in traditional business intelligence systems to more complex analytics.

Second, enterprises will have to upgrade the skill set of their business analysts. Instead of merely running current business intelligence tools, they will need to become facile in statistical operations. This will be a non-trivial talent upgrade. One enterprise manager that I talked to said this will be difficult and could require personnel turnover. One way to mitigate this issue is to use "overkill analytics." People advocating this approach suggest running simpler analytics on more data to achieve a given result. In other words, the analyst can learn simpler statistical techniques rather than more complex ones. How enterprises cope with this transition to complex analytics will be interesting to watch.

Third, I think the complex analytics market is in its infancy, and which kind of system will ultimately win is an open question. In the meantime, expect a huge amount of marketing "FUD" (fear, uncertainty, and doubt) from the larger DBMS players.

Lastly, notice that I have not included Hadoop as an option. Since complex analytics are not "embarrassingly parallel," Hadoop will suffer significant performance problems. Hence, I do not consider this a reasonable Hadoop use case.

Disclosure

In addition to being an adjunct professor at the Massachusetts Institute of Technology, Michael Stonebraker is associated with four startups that are either producers or consumers of database technology.
