Communications of the ACM

The Waves of Publication

As I write this article, an earlier installment from this blog, about nastiness in computer science, has just been republished in the Communications of the ACM (the actual journal) and has triggered a plethora of new reader comments, showing how sensitive the community is to the state of our publication culture. Indeed, most of my recent posts have been about publication. This was supposed to be a software engineering blog, and I do intend to return to software engineering; after all, software development is what I do. Before that, however, there is more to say about publication; in particular, how much the very concept has changed, half of its traditional meaning having disappeared in hardly more than a decade. Or to put it differently (if you will accept the metaphor, explained below), how it has lost its duality: no longer particle, just wave.

Process and product

Some words ending with ation (atio in Latin) describe a change of state: restoration, dilatation. Others describe the state itself, or one of its artifacts: domination, fascination. And yet others play both roles: decoration can denote either the process of embellishing (she works in interior decoration), or an element of the resulting embellishment (Christmas tree decoration).

Since at least Gutenberg, publication has belonged to that last category: both process and artifact. A publication is an artifact, such as an article or a book accessible to a community of readers. We are referring to that view when we say "she has a long publication list" or "Communications of the ACM is a prestigious publication." But the word also denotes a process, built from the verb "publish" the same way "restoration" is built from "restore" and "insemination" from "inseminate": the publication of her latest book took six months.

The thesis of this article is that the second view of publication will soon be gone, and its purpose is to discuss the consequences for scientists.

Let me restrict the scope: I am only discussing scientific publication, and more specifically the scientific article. The situation for books is less clear; for all the attraction of the Kindle and other tablets, the traditional paper book still has many advantages and it would be risky to talk about its demise. For the standard scholarly article, however, electronic media and the web are quickly destroying the traditional setup.

That was then . . .

Let us step back a bit to what publication, the process, was a couple of decades ago. When you wrote something, you could send it by post to your friends (Edsger Dijkstra famously turned this idea into his modus operandi, regularly xeroxing his "EWD" memos [1] to a few dozen people), but if you wanted to make it known to the world you had to go through the intermediation of a PUBLISHER: the mere word was enough to overwhelm you with awe. That publisher, either a non-profit organization or a commercial house, was in charge not only of selecting papers for a conference or journal but of bringing the accepted ones to light. Once the paper was accepted, there began a long and tedious process of preparing the text to the publisher's specifications and correcting successive versions of "galley proofs." That step could be painful for papers having to do with programming, since in the early days typesetters had no idea how to lay out code. A few months or a couple of years later, you received a package in the mail and proudly opened the journal or proceedings at the page where YOUR article appeared. You would also, usually for a fee, receive fifty or so separately printed (tirés à part) reprints of just your article, typeset the same way but more modestly bound. Ah, the discreet charm of 20th-century publication!

. . . and this is now

Cut to today. Publishers stopped doing the typesetting for you long ago. They impose the format, obligingly give you LaTeX, Word or FrameMaker templates, and you take care of everything. We have moved to WYSIWYG publishing: the version you write is the version you submit through a site such as EasyChair or CyberChair and the version that, after correction, will be published. The middlemen have been cut out.

We moved to this system because technology made it possible, and also because of the irresistible lure, for publishers, of saving money (even if, in the long term, they may have removed some of the very reasons for their existence). The consequences of this change go, however, far beyond money.

Integrating change

To understand how fundamentally the stage has changed, let us go back for a moment to the old system. It has many advantages, but also limitations. Some are obvious, such as the amount of work required, involving several people, and the delay from paper completion to paper publication. But in my view the most significant drawback has to do with managing change. If after publication you find a mistake, you must convince the journal to include an erratum: a new mini-article, subject to the same process. That requirement is reasonable enough but the scheme does not support a significant mode of scientific writing: working repeatedly on a single article and progressively refining it. This is not the "LPU" (Least Publishable Unit) style of publishing, but a process of studying an important idea or research project and aiming towards the ideal paper about it by successive approximation. If six months after the original publication of an article you have learned more about the topic and how to present it, the publication strategy is not obvious: resubmit it and risk being accused of self-plagiarism; avoid repetition of basic elements, making the article harder to read independently; artificially increase differences. This conundrum is one of the legitimate sources of the LPU phenomenon: faced with the choice between freezing material and repeating it, people end up publishing it bit by bit.

Now back again to today. If you are a researcher, you want the world to know about your ideas as soon as they are in a clean form. Today you can do this easily: no need to photocopy page after page and lick postage stamps on envelopes the way Dijkstra did; just generate a PDF and put it on your Web page or (to help establish a record if a question of precedence later comes up) on ArXiv. Just to make sure no one misses the information, tweet about it and announce it on your Facebook and LinkedIn pages. Some authors do this once the paper has been accepted, but many start earlier, at the time of submission or even before. I should say here that not all disciplines allow such author behavior; in biology and medicine in particular publishers appear to limit authors’ rights to distribute their own texts. Computer scientists would not tolerate such restrictions, and publishers, whether nonprofit or commercial, largely leave us alone when we make our work available on the Web.

But we are talking about far more than copyright and permissions (in this article I am in any case staying out of these emotionally and politically charged issues, open access and the like, and concentrating on the effect of technology changes on the process of publishing and the publication culture). The very notion of publication has changed. The process part is gone; only the result remains, and that result can be an evolving product, not a frozen artifact.

Particle, or wave?

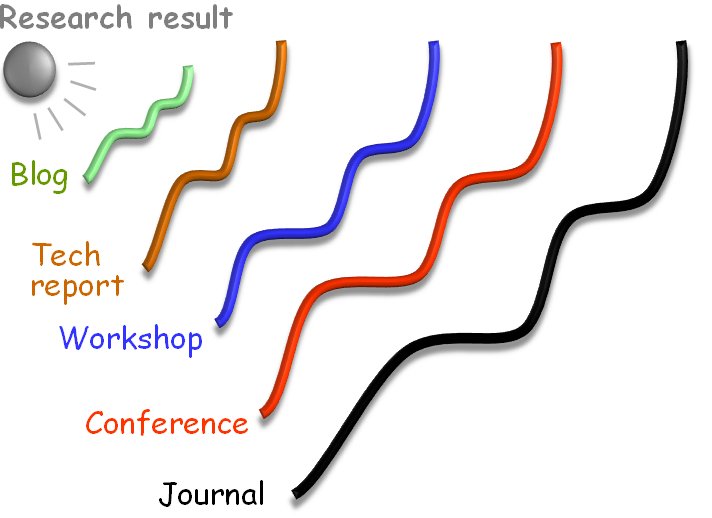

Another way to describe the difference is that a traditional publication, for example an article published in a journal, is like a particle: an identifiable material object. With the ease of modification, a publication becomes more like a wave, which allows an initial presentation to propagate to successively wider groups of readers:

Maybe you start with a blog entry, then you register the first version of the work as a technical report in your institution or on ArXiv, then you submit it to a workshop, then to a conference, then a version of record in a journal.

In the traditional world of publication each of these would have to be made sufficiently different to avoid the accusation of plagiarism. (There is some tolerance, for example a technical report is usually not considered prior publication, and it is common to submit an extended version of a conference paper to a journal — but the journal will require that you include enough new material, typically "at least 30%.")

For people who like to polish their work repeatedly, that traditional model is increasingly hard to accept. If you find an error, or a better way to express something, or a complementary result, you just itch to make the change here and now. And you can. Not on a publisher's site, but on your own, or on ArXiv. After all, one of the epochal contributions of computer technology, not heralded loudly enough, is, as I argued in another blog article [2], the ease with which we can change, extend and refine our creations, developing like a Beethoven and releasing like a Mozart.

The "publication as product" becomes an evolving product, available at every step as a snapshot of the current state. This does not mean that you can cover up your mistakes with impunity: archival sites use "diff" techniques to maintain a dated record of successive versions, so that in case of doubt, or of a dispute over precedence, one can assess beyond doubt who released what statement when. But you can make sure that at any time the current version is the one you like best. Often, it is better than the official version on the conference or journal site, which remains frozen forever as it was on the day of its release.
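As an illustration only, here is a minimal Python sketch of such a dated, append-only record of versions, with a diff computed on demand between any two of them. The class `VersionedPaper` and its methods are hypothetical names invented for this sketch; it does not describe how arXiv or any actual archival site is implemented, and it stores full texts rather than diffs for simplicity.

```python
import difflib
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class VersionedPaper:
    """Hypothetical dated record of a paper's successive versions."""
    versions: list = field(default_factory=list)  # (timestamp, text) pairs

    def release(self, text, when=None):
        # Append only: earlier versions are never overwritten.
        when = when or datetime.now(timezone.utc)
        self.versions.append((when, text))

    def current(self):
        # The latest released text is the one readers see by default.
        return self.versions[-1][1]

    def changes(self, old, new):
        """Unified diff between two versions, identified by 0-based index."""
        (t_old, a), (t_new, b) = self.versions[old], self.versions[new]
        return list(difflib.unified_diff(
            a.splitlines(), b.splitlines(),
            fromfile=f"v{old} ({t_old.date()})",
            tofile=f"v{new} ({t_new.date()})",
            lineterm=""))


if __name__ == "__main__":
    paper = VersionedPaper()
    paper.release("Publication is a particle.",
                  datetime(2013, 1, 10, tzinfo=timezone.utc))
    paper.release("Publication is a wave.",
                  datetime(2013, 2, 12, tzinfo=timezone.utc))
    for line in paper.changes(0, 1):
        print(line)
```

The author always controls what `current()` returns, while the earlier entries stay available to settle who released what statement when.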

What then remains of "publication as process"? Not much; in the end, a mere drag-and-drop from the work folder to the publication folder.

Well, there is an aspect I have not mentioned yet.

The sanction

Apart from its material side, now gone or soon to be gone, the traditional publication process has another role: what a recent article in this blog [3] called sanction. You want to publish your latest scientific article in Communications of the ACM not just because it will end up being printed and mailed, but because acceptance is a mark of recognition by experts. There is a whole gradation of prestige, well known to researchers in every particular field: conferences are better than workshops, some journals are as good as conferences or higher, some conferences are far more prestigious than others, and so on.

That sanction, that need for an independent stamp of approval, will remain (and, for academics, young academics in particular, is of ever growing importance). But now it can be completely separated from the publication process and largely separated (in computer science, where conferences are so important today) from the conference process.

Here then is what I think scientific publication will become. The researcher (the author) will largely be in control of his or her own text as it goes through the successive waves described above. A certified record will be available to verify that at time t the document d had the content c. Then at specific stages the author will submit the paper. Submit in the sense of appraisal and, if the appraisal is successful, certification. The submission may be to a conference: you submit your paper for presentation at this year's ICSE, POPL or SIGGRAPH. (At the recent Dagstuhl publication culture workshop, Nicolas Holzschuch mentioned that some graphics conferences accept for presentation work that has already been published; isn't this scheme more reasonable than the currently dominant practice of conference-as-publication?) You may also submit your work, once it reaches full maturity, to a journal. Acceptance does not have to mean that any trees get cut, that any ink gets spread, or even that any bits get moved: it simply enables the journal's site to point to the article, and your site to add this mark of recognition.
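To make the idea of a record verifying that "at time t the document d had the content c" concrete, here is a small Python sketch based on content fingerprints. Everything in it (`CertifiedRecord`, `register`, `verify`, the document identifier) is a hypothetical name chosen for illustration; a real service would presumably also need a trusted third party and tamper-proof timestamps, which this in-memory sketch does not attempt.

```python
import hashlib
from datetime import datetime, timezone


def fingerprint(content: str) -> str:
    """SHA-256 digest standing in for the content c."""
    return hashlib.sha256(content.encode("utf-8")).hexdigest()


class CertifiedRecord:
    """Hypothetical ledger of (time t, document d, fingerprint of c)."""

    def __init__(self):
        self._entries = []

    def register(self, doc_id, content, when):
        # Record only the fingerprint; the text itself stays with the author.
        self._entries.append((when, doc_id, fingerprint(content)))

    def verify(self, doc_id, content, when):
        """True if doc_id had exactly this content at time `when`."""
        # Consider only versions of this document registered by `when`.
        earlier = [e for e in self._entries
                   if e[1] == doc_id and e[0] <= when]
        if not earlier:
            return False
        latest = max(earlier, key=lambda e: e[0])
        return latest[2] == fingerprint(content)


if __name__ == "__main__":
    utc = timezone.utc
    record = CertifiedRecord()
    record.register("waves", "first draft", datetime(2013, 1, 10, tzinfo=utc))
    record.register("waves", "revised draft", datetime(2013, 2, 12, tzinfo=utc))
    print(record.verify("waves", "first draft", datetime(2013, 1, 20, tzinfo=utc)))
    print(record.verify("waves", "revised draft", datetime(2013, 1, 20, tzinfo=utc)))
```

A conference or journal could then attach its stamp of approval to a specific fingerprint, independently of where the text is hosted.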

There may also be other forms of recognition, social-network or Trip Advisor style: the community gets to pitch in, comment and assess. Don't laugh too soon. Sure, scientific publication has higher standards than Wikipedia, and will not let the wisdom of the crowds replace the judgment of experts. But sometimes you want to publish for communication, not sanction, and especially if you have the privilege of no longer being trapped in the publish-or-perish race you simply want to make your research known, and you have little patience for navigating the meanders of conventional publication, genuflecting to the publications of PC members, and following the idiosyncratic conventional structure of the chosen conference community. Then you just publish and let the world decide.

In most cases, of course, we do need the sanction, but there is no absolute reason it should be tied to the traditional structures of journal publication and conference participation. There will be resistance, if only because of the economic interests involved; some of what we know today will remain, albeit with a different focus: conferences, as a place where the best work of the moment is presented (independently of its publication); printed books, as noted; and printed journals that bring real added value in the form of high-quality printing, layout and copy editing (and might still insist that you put on their site a copy of your paper rather than, or in addition to, a reference to your working version).

The trend, however, is irresistible. Publication is no longer a process, it is a product, increasingly under the control of the authors. As a product it is no longer a defined particle but a wave, progressively improving as it reaches successive classes of readership, undergoes successive steps of refinement and receives, informally from the community and officially from more or less prestigious sources, successive stamps of approval.

References

[1] Dijkstra archive at the University of Texas at Austin.

[2] Bertrand Meyer: Computer Technology: Making Mozzies out of Betties, blog article, 2 August 2009.

[3] Bertrand Meyer: Conferences: Publication, Communication, Sanction, article on this blog, 10 January 2013.

Comments

Anonymous

February 12, 2013

Dear Dr. Meyer!

Your article The Waves of Publication is not the first on the subject and definitely not the last. Each author writes at his own level; yours is more interesting to read than many others and it is an expected thing that a professor from ETH would mention the duality even writing about such a far away thing as the publication process.

However, for a scientist of your level it's a bit strange to write about the symptoms but not to write about the disease. I am absolutely sure that you are aware of the problem and the main cause of the current situation but, as many others, you don't want to mention it. You write with the excellent knowledge of details about many aspects but carefully avoid mentioning that everything started to go wrong when the number of publications became the only and the main estimation of the scientists' level.

Dijkstra sent his memos when he had new ideas which he wanted to discuss; those were not intended for increasing the number of publications mentioned in his CV.

Scientific achievements can be measured only on a qualitative level: an author of new theory XXX, an inventor of new device YYY, or an algorithm ZZZ. In the majority of cases it would be more correct to say that a person made a significant contribution to the existing theory. A qualitative estimation needs more time and efforts, but an attempt to substitute it with some strange quantitative mark (simply a number) has the same sense as the estimation of the patient's condition by an average temperature throughout a hospital. If the number of papers became the main estimation, then why to cry about the quality of those papers; if the quality is not included into estimation then it doesn't matter at all. And that's exactly what we have now. If the rules are changed so drastically, then it's another game. It's not any more about the life achievements; it's about the number of publications per year.

Decades ago the papers were published to show the new results; so the majority of articles contained one new idea (rarely more than one) per article. During one generation of professors an average number of publications mentioned in CV increased by the ratio of 2 to 2.5. But people are the same, so an average number of ideas per professor is still the same. You can't publish one article with idea and the next one without anything (by a simple comparison the worse one would be not allowed) and you can't publish half an idea in each one, so the solution was obvious and simple: there are no ideas in both. They are on the same level and all of them are published (you wrote about the whole mechanism). The consequences are simple: there are a lot of publications and nearly all of them contain nothing except bla bla bla. My estimation is that now I find really interesting articles on which you have to think and which can push you forward in your own research with a frequency of once in three to four years. There are still people who can think perfectly and who care about their publications!

If we continue to look at the number of publications as the main estimation of scientists' achievements, then any steps to improve the quality of papers will be useless.

With best regards,

Sergey Andreyev

CACM Administrator

(To Sergey Andreyev.) Thanks for your kind comments. You make some good points but you seem to be responding to a different article. While I have made critical comments about the CS publication culture elsewhere, this one is descriptive rather than judgmental and is about something else. You state that I "write about the symptoms but not to write about the disease"; the present article, however, does not describe any "disease". It explains, non-judgmentally, the changing nature of publication, which follows from technological evolution. The ability to publish an article without printing it and even without going through a publisher is not a disease; it is simply the way things are now.

Although not directly related to my article, your point is interesting. David Parnas ("Stop the numbers game", CACM Nov. 2007, http://dl.acm.org/citation.cfm?id=1297815) has already criticized the counting of publications. But in no serious university that I know in Europe, North America, Australia etc. does any committee judge candidates by publication counts. If they use metrics altogether (which good universities only do, if at all, as a complement to other, non-quantitative criteria), they will consider citation counts, which are subject to criticism as well but much more reasonable since they correlate to impact, whereas publication counts only reflect activity. I know that in some countries (other than the ones mentioned above) publication counts are still used, but this will never happen in a top research institution.

Even if the race for publication counts is not the reason, you are right that the community publishes too much in LPU style (Least Publishable Unit). As Clemens Szyperski once told me, "it used to be that you worked a year or two on an article, then published it". Now it's more submit, submit, submit.

-- Bertrand Meyer

Anonymous

Dear Dr. Meyer!

I especially like the situation when an opponent uses arguments which strengthen my point of view. I also prefer to base my point of view on the numbers.

Three years ago I was looking through a series of articles on some aspects of user interface design and started (in parallel) to look at the personal web-pages of their authors. Just for better understanding of where the work was done and how it was related to other results. The authors were highly respected scientists from the leading universities of North America and Europe and the majority has worked on both sides of the Atlantic. I saw with a big surprise (I have to admit that I was simply a fool not knowing it before) that as a rule an assistant professor at the age of 35 years has now between 100 and 120 publications. "If six months after the original publication of an article you have learned more about the topic..." With unbelievable precision your estimation of the process 20 or 30 years ago correlates with my estimations, because I wrote more than once that an average number of publications for the previous generation of professors at the end of a very successful career was 75 to 80. Those prolific researchers of nowadays will have something like 250 to 300 or more at the time when they will be included into some Hall of Fame.

There is only one BUT. Not long ago Robert Cecil Martin mentioned in his article (http://blog.8thlight.com/uncle-bob/2012/12/19/Three-Paradigms.html) three main ideas that changed the world of programming. It happened so that the latest of them was announced by Edsger Dijkstra in 1968. Only 45 years ago.

My numbers from those web-pages cannot be used as a base for a serious statistical analysis. It was a small sampling, but I have a feeling that the sampling on the wider basis will give similar results. I hear not for the first time that no serious universities from Europe, North America, and Australia judge candidates by publication counts. Only I could never get any explanation why all those professors, instead of spending their time on the real work, submit, submit, submit if the number of publications does not play any role. Are they all crazy or is there something else?

I like your idea about particles and waves; only I would interpret these words in a slightly different way. Years ago it was a particle: a single publication with a new idea. You could read about it, think about it, compare it with your own results, and decide either to use or reject. Now the idea, if there is any, is dissolved in the waves of words and is dispersed. There are definitely waves; the infinite waves of publications. They are mostly as empty as the areas of the Pacific Ocean. Waves after waves without anything special in any of them. The main goal of the whole process is waves and not the ideas.

I hope that my response is not to a different article.

Best wishes

Sergey Andreyev
