Communications of the ACM

Small-Group Code Reviews For Education

Code reviews are essential in professional software development. When I worked at Google, every line of code I wrote had to be reviewed by several experienced colleagues before getting committed into the central code repository. The primary stated benefit of code review in industry is improving software quality, but an important secondary benefit is education. Code reviews teach programmers how to write elegant and idiomatic code using a particular language, library, or framework within their given organization. They also give experts a channel to pass their domain-specific knowledge on to novices: knowledge that can't easily be captured in a textbook or instruction manual.

Since I've now returned to academia, I've been thinking a lot about how to adapt best practices from industry into my research and teaching. I've spent the past year as a postdoc in Rob Miller's group at MIT CSAIL, where I've watched him deploy some ideas along these lines. For instance, one of his research group's projects, Caesar, scales up code review to classes of a few hundred students.

In this article, I want to describe a lightweight method that Rob developed for real-time, small-group code reviews in an educational setting. I can't claim any credit for this idea; I'm just the messenger :)

Small-group code reviews for education

Imagine you're a professor training a group of students to get up to speed on specific programming skills for their research or class projects.

Ideally, you'd spend lots of one-on-one time with each student, but obviously that doesn't scale. You could also pair them up with senior grad students or postdocs as mentors, but that still doesn't scale well, and you then need to keep both mentors and mentees motivated enough to schedule regular meetings with one another.

Here's another approach that Rob has been trying: use regular group meeting times, when everyone is present anyway, to do real-time, small-group code reviews. Here's the basic protocol for a 30-minute session:

- Gather together in a small group of 4 students plus 1 facilitator (e.g., a professor, TA, or senior student mentor). Everyone brings their laptop and sits next to one another.

- The facilitator starts a blank Google Doc and shares it with everyone. The easiest way is to click the "Share" button, set permissions to "Anyone who has the link can edit," and then send the link to everyone in an email or as a ShoutKey shortened URL. This way, people can edit the document without logging in with a Google account.

- Each student claims a page in the Google Doc, writes their name on it, and then pastes in one page of code that they've recently written. They can pass the code through an HTML colorizer to get syntax highlighting. There's no hard and fast rule about what code everyone should paste in, but ideally it should be about a page long and demonstrate some non-trivial functionality. To save time, this setup can be done offline before the session begins.

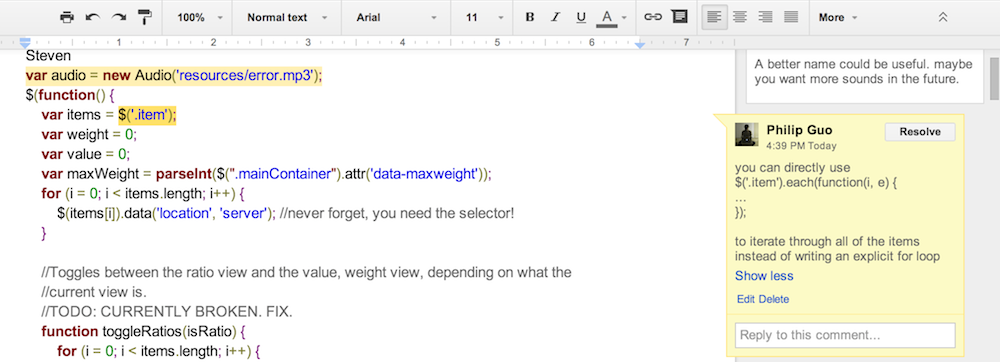

- Start by reviewing the first student's code. The student first gives a super-quick description of their code (< 30 seconds) and then everyone reviews it in silence for the next 4 minutes. To review the code, simply highlight portions of text in the Google Doc and add comments using the "Insert comment" feature. Nobody should be talking during these 4 minutes.

- After time is up, spend 3 minutes going around the group and having each student talk about their most interesting comment. This is a time for semi-structured discussion, organized by the facilitator. The magic happens when students start engaging with one another and having "A HA! I learned something cool!" moments, and the facilitator can more or less get out of the way.

- Now move on to the next student and repeat. With 4 students each taking ~7.5 minutes, the session can fit within a 30-minute time slot. To maintain fairness, the facilitator should keep track of time and cut people off when time expires. If students want to continue discussing after the session ends, then they can hang around afterward.

That's it! It's such a simple, lightweight activity. But it's worked remarkably well so far for training undergraduate researchers in our group.
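As a rough aid for the facilitator's timekeeping, here is a minimal sketch that turns the protocol above into a minute-by-minute timetable. It assumes exactly the phase lengths described (a 30-second intro, 4 minutes of silent review, and 3 minutes of discussion per student) with no transition time between students; the function names and student names are hypothetical.

```javascript
// Hypothetical facilitator helper: build a timetable for one review session.
// Phase lengths assume the protocol described above, with no transition time.
const PHASES = [
  { name: "intro", seconds: 30 },
  { name: "silent review", seconds: 4 * 60 },
  { name: "discussion", seconds: 3 * 60 },
];

// Format a number of elapsed seconds as mm:ss.
function formatClock(totalSeconds) {
  const m = Math.floor(totalSeconds / 60);
  const s = totalSeconds % 60;
  return `${String(m).padStart(2, "0")}:${String(s).padStart(2, "0")}`;
}

// Walk through each student's phases in order, recording start/end times.
function buildSchedule(students, phases = PHASES) {
  const slots = [];
  let t = 0;
  for (const student of students) {
    for (const phase of phases) {
      slots.push({
        student,
        phase: phase.name,
        start: formatClock(t),
        end: formatClock(t + phase.seconds),
      });
      t += phase.seconds;
    }
  }
  return { slots, totalMinutes: t / 60 };
}

// Example with four hypothetical students.
const { slots, totalMinutes } = buildSchedule(["Ana", "Ben", "Chen", "Dee"]);
for (const s of slots) {
  console.log(`${s.start}-${s.end}  ${s.student}: ${s.phase}`);
}
console.log(`Total: ${totalMinutes} minutes`);
```

Printing the timetable confirms the arithmetic: four students at 7.5 minutes each finish at exactly 30:00, so there is no slack, and any time spent switching between students has to come out of one of the phases.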

Benefits

Lots of pragmatic learning occurs during our real-time code reviews. The screenshot above shows one of my comments from today's session about how the student can replace an explicit JavaScript for-loop with a jQuery .each() function call. This is exactly the sort of knowledge that's best learned at a code review rather than by, say, reading a giant book on jQuery. Also, note that another student commented about improving the name of the "audio" variable. A highly motivating benefit is that students learn a lot even when they are reviewing someone else's code, not just when their own code is being reviewed.
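To make that kind of comment concrete, here is a hypothetical before-and-after; it is not the student's actual code from the screenshot. It uses the built-in Array.prototype.forEach rather than jQuery's .each() so that the sketch runs without jQuery loaded. Both take a callback instead of manual index bookkeeping, but note the argument order differs: jQuery passes (index, element), while forEach passes (element, index).

```javascript
// Hypothetical student code: label each clip with its position in the list.
const clips = ["intro.mp3", "chorus.mp3", "outro.mp3"];

// Before: an explicit for-loop with manual index bookkeeping.
const before = [];
for (let i = 0; i < clips.length; i++) {
  before.push(`${i}: ${clips[i]}`);
}

// After: callback-style iteration. With jQuery this would be
// $.each(clips, function (index, value) { ... }); the built-in forEach
// is used here instead, and it passes (value, index) rather than (index, value).
const after = [];
clips.forEach((clip, index) => {
  after.push(`${index}: ${clip}`);
});

console.log(before.join("\n"));
console.log(JSON.stringify(before) === JSON.stringify(after)); // true
```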

With a 4-to-1 student-to-facilitator ratio, this activity scales well in a research group or small project-based class. This group size is well-suited for serendipitous, semi-impromptu discussions and eliminates the intimidation factor of sitting one-on-one with a professor. But at the same time, it isn't large enough for students to feel anonymous and tune out like they would in a classroom. Since everyone sees who else is commenting on the code, there's some social pressure to participate rather than zoning out.

A side benefit of holding regular code reviews is that it forces students to make consistent progress on their projects so that they have new code to show during each session. This level of added accountability can keep momentum going strong on research projects since, unlike industry projects, there are no market-driven shipping goals to motivate daily progress. At the same time, it's important not to make this activity seem like a burdensome chore, since that might actually drain student motivation.

Comparing with industry code reviews

Industry code reviews are often asynchronous and offline, but ours happen in a real-time, face-to-face setting. This way, everyone can ask and answer clarifying questions on the spot. Everything happens within a concentrated 30-minute time span, so everyone maintains a shared context without getting distracted by other tasks.

Also, industry code reviews are often summative assessments where your code can be accepted only if your peers give it a good enough "grade." This policy makes sense when everyone is contributing to the same production code base and wants to keep quality high. But here, reviews are purely formative: students know they won't be "graded" on them, and that the activity is done for their own educational benefit.

Parting thoughts

When running this activity, you might hit the following snags:

- Overly quiet students. It usually takes students a few sessions before they feel comfortable giving and receiving critique from their peers. It's the facilitator's job to draw out the quieter students and to show that everyone is there to help, not to put one another down. Also, not all comments need to be critiques; praise and questions are also useful. I've found that even the shyest students eventually open up when they see the value of this activity.

- Running over time. Sometimes when a discussion starts to get really interesting, it's tempting to continue beyond the 3-minute time limit. However, that might make other students feel left out since their code didn't receive as much attention from the group. And it risks running the meeting over the allotted time. It's the facilitator's job to keep everyone on schedule.

- Lack of context. If each student is working on their own project, it might be hard to understand what someone else's code does simply by reading it for a few minutes. Thus, student comments might tend toward the superficial (e.g., "put an extra space here for clarity"). In that case, the facilitator should step in to provide some deeper, more substantive comments. Another idea is to tell students to ask a question in their code review if they can't think of a meaningful critique. That way, at least they get to learn something new as a reviewer.

Try running some real-time code reviews at your next group or class meeting.
