Communications of the ACM

Budget Beowulf Clusters

Joel Adams, Calvin College

At SIGCSE 2015, five presenters brought Beowulf clusters costing from $200 to $2,000 and demonstrated them live.

Back in 2006, then-student Tim Brom and I attended a conference where we saw Charlie Peck of Earlham College give a live demo of LittleFe, a 6-node Beowulf cluster small enough to fit in a suitcase and travel to conferences. Inspired by what we had seen, I designed Microwulf, a four-node "personal" Beowulf cluster, and worked with Tim to build it. It produced over 26 GFLOPS of sustained performance for less than $2,500. By mid-2007, component prices had fallen enough that one could build the same system for under $1,300. In the months and years that followed, I heard from hundreds of people who were interested in building their own small Beowulf clusters.

Fast-forward to summer 2014, when I kept crossing paths with people who had built small Beowulf clusters using inexpensive system-on-a-board (SoaB) computers for the compute nodes. (The $35 Raspberry Pi is probably the best-known SoaB computer, but as you'll see, there are many others to choose from.)

What really struck me was that no two of these people had made the same design choices; each had chosen a different SoaB computer for their cluster. It occurred to me that it would be extremely interesting to get all of these people together in the same room.

To accomplish this, I organized a Special Session at SIGCSE 2015 titled Budget Beowulfs: A Showcase of Inexpensive Clusters for Teaching Parallel and Distributed Computing. Five people brought their clusters to the session and assembled them at the front of the room, where the presenters and the session's attendees could inspect them.

The session was organized into three parts:

- 25 minutes of lightning talks, in which each cluster designer gave an overview of their cluster and how they use it to teach parallel and distributed computing.

- 25 minutes of general Q&A between the audience and the presenters in a panel format.

- 25 minutes of "Show & Tell" between the audience and the presenters in a poster-session format, but with actual clusters instead of posters.

Here are descriptions and photos of the clusters from the session.

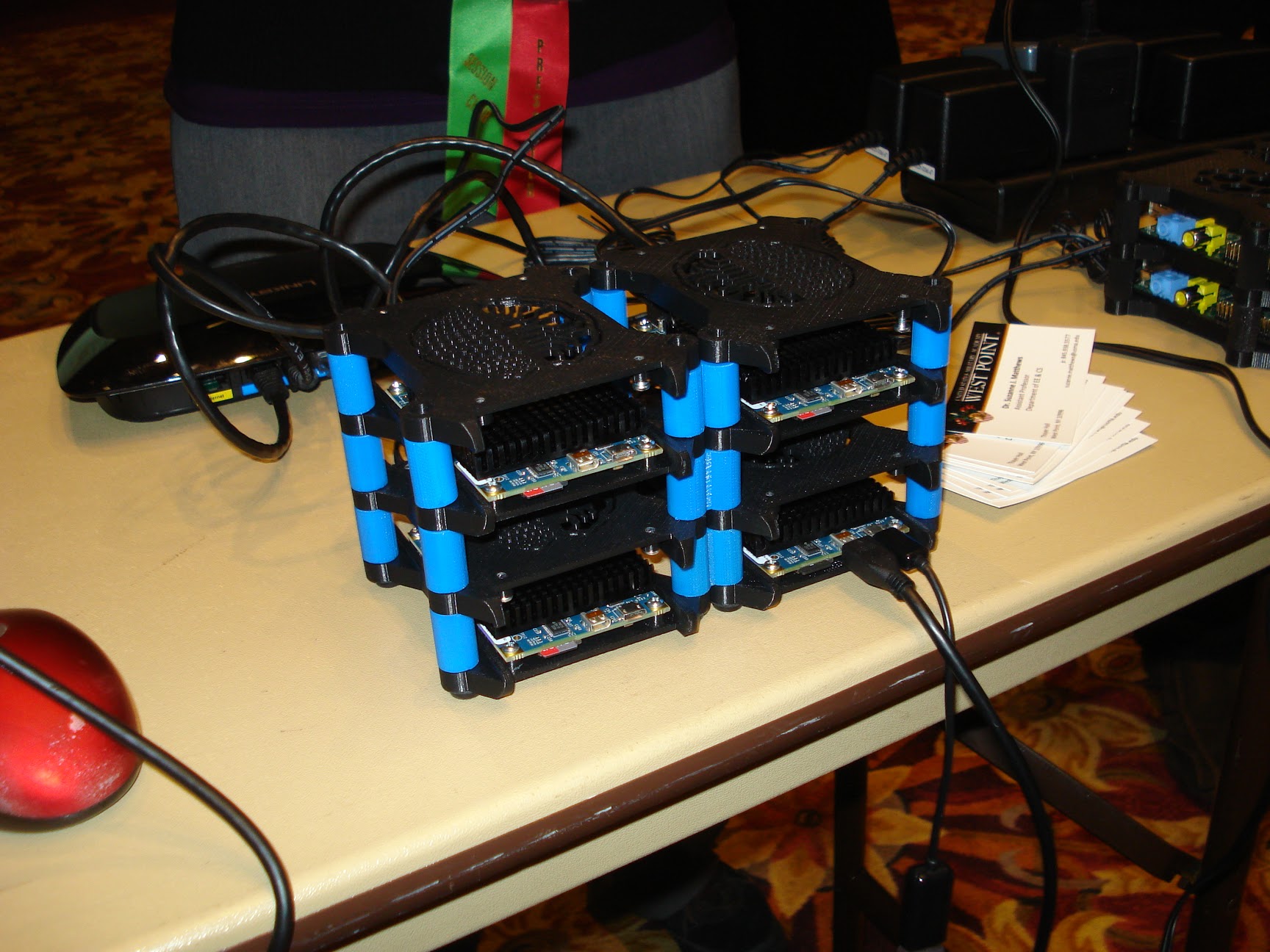

1. StudentParallella is a cluster built by Dr. Suzanne Matthews from the U.S. Military Academy. Her cluster has four nodes, each with an Adapteva Parallella SoaB computer. Each Parallella has an ARM A9 dual-core CPU plus a 16-core Epiphany co-processor, giving the cluster a total of 72 cores. The nodes are connected using Gigabit Ethernet. In the photos, the nodes are in cases that Dr. Matthews designed and printed using a 3D printer. Total cost: $650.

2. PisToGo is a "cluster in a briefcase" built by Jacob Caswell, a sophomore CS and Physics major at St. Olaf College. His cluster has five nodes, each with a Raspberry Pi 2 SoaB computer, mounted on a custom-built heat sink. Each "Pi 2" has an ARM A7 quad-core processor, giving the cluster a total of 20 cores. The nodes are connected using Fast Ethernet. The photos show the briefcase that Jacob purchased and outfitted with a screen, keyboard, LCD display, venting, and the custom heat sink. The result resembles a device from a James Bond movie, at a total cost of $300.

3. HSC-1 and HSC-2 are two 2-node "half shoebox clusters" built by Dr. David Toth of Centre College. As you might guess, each of these clusters is about half the size of a shoebox – small enough to fit in a modest Tupperware container.

HSC-1 uses two CubieBoard2 SoaB computers as its nodes. Each CubieBoard2 has an ARM A7 dual-core CPU for a total of four cores.

HSC-2 uses two ODROID-U3 SoaB computers as its nodes. Each ODROID-U3 has an ARM A9 quad-core CPU for a total of eight cores.

Both clusters use Fast Ethernet for their interconnect, and each costs about $200. That is inexpensive enough that, for a $200 lab fee, each student can build their own cluster, carry it (in its Tupperware container) in their backpack, and use it throughout a course. For example, students in a Computer Organization course might build such a cluster and then use it for hands-on exercises that explore machine-level (ARM assembly language), single-threaded, shared-memory parallel, and/or distributed-memory parallel computing.

4. Rosie is a six-node cluster built by Dr. Elizabeth Shoop from Macalester College. Each node is an Nvidia Jetson TK1 SoaB computer, which has an ARM A15 quad-core CPU plus a Kepler GPU with 192 CUDA cores, giving the cluster 24 CPU cores and 1,152 CUDA cores. The cluster uses Gigabit Ethernet as its interconnect. With a keyboard, display, and Pelican case, the total cost was $1,350.

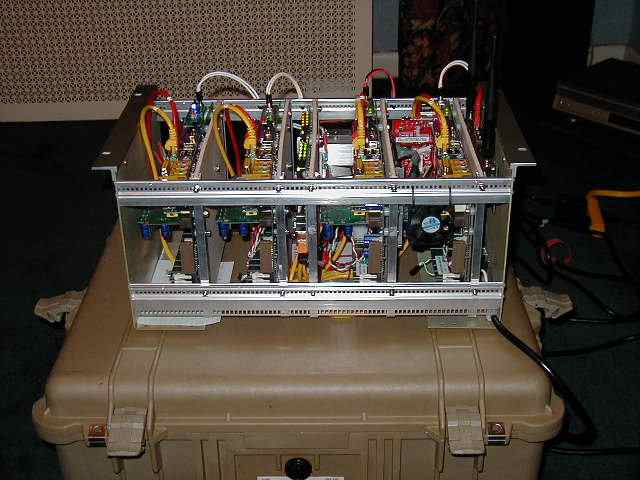

5. As mentioned previously, LittleFe is a six-node cluster built by Dr. Charlie Peck (and others) from Earlham College. LittleFe is the most venerable of these clusters, having evolved through several generations. In its current, 4th generation, each node has an ASRock mainboard with an Intel Celeron J1900 quad-core CPU whose integrated Intel HD Graphics provides four SIMD execution units (EUs), for a total of 24 CPU cores and 24 EUs. The cluster uses Gigabit Ethernet as its interconnect and includes a keyboard, display, and Pelican case, bringing the total cost to about $2,000 (though it is free to those who attend a "LittleFe Buildout", where participants assemble and take home their own LittleFe).
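Each core total quoted above is simply the node count multiplied by the per-node core count. As a small sketch, the Python below tallies the per-node figures given in the five descriptions, keeping CPU cores separate from co-processor or GPU cores. The `Cluster` record and `cluster_totals` helper are hypothetical names introduced only for illustration. Keeping the two kinds of cores separate shows, for instance, that StudentParallella's 72 cores are 8 ARM cores plus 64 Epiphany cores.

```python
from typing import NamedTuple, Optional, Tuple


class Cluster(NamedTuple):
    """Hypothetical record of one cluster's per-node hardware."""
    nodes: int
    cpu_cores_per_node: int
    accel_cores_per_node: int = 0      # co-processor or GPU cores per node
    accel_kind: Optional[str] = None   # label for the accelerator cores


# Per-node figures as stated in the cluster descriptions.
CLUSTERS = {
    "StudentParallella": Cluster(4, 2, 16, "Epiphany cores"),
    "PisToGo": Cluster(5, 4),
    "HSC-1": Cluster(2, 2),
    "HSC-2": Cluster(2, 4),
    "Rosie": Cluster(6, 4, 192, "CUDA cores"),
    "LittleFe": Cluster(6, 4, 4, "GPU EUs"),
}


def cluster_totals(c: Cluster) -> Tuple[int, int]:
    """Return (total CPU cores, total accelerator cores) for a cluster."""
    return c.nodes * c.cpu_cores_per_node, c.nodes * c.accel_cores_per_node


if __name__ == "__main__":
    for name, c in CLUSTERS.items():
        cpu, accel = cluster_totals(c)
        extra = f" + {accel} {c.accel_kind}" if accel else ""
        print(f"{name}: {cpu} CPU cores{extra}")
```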

The presenters arrived and began assembling their clusters about 90 minutes before the session began. Most had never met before, and they enjoyed talking with peers who had wrestled with similar design issues but reached different decisions. There was a healthy exchange of ideas as each looked over the others' creations and saw how each design reflected its designer's priorities. All had interesting stories to share about the reactions of TSA officers as they took their creations through airport security.

By the time the audience arrived, there was a palpable energy in the room. The first 25 minutes flew by as the presenters gave their lightning talks and introduced the audience to their clusters.

Likewise, the second 25 minutes flew by as the audience members peppered the panel with questions. The question that generated the most discussion was,

In a course on parallel computing, which should be taught first: MPI (distributed-memory multiprocessing) or OpenMP (shared-memory multithreading)?

The four professors on the panel were evenly split on the question. Two argued that starting with message-passing provides students with a cleaner introduction to parallel computing by deferring shared-memory’s data races until later. The other two argued that starting with multithreading provides students with a more natural transition (from sequential) to parallel computing. It was a lively debate, with no one yielding any ground and no clear winner.
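To make the two positions concrete, here is a minimal Python sketch that uses the standard library's `threading` and `queue` modules as a stand-in for OpenMP and MPI; it does not use either of those libraries, and the threads-as-processes analogy is an assumption made for illustration. Both functions sum a list in parallel. In the shared-memory version, threads add their partial sums to one shared variable, so a lock is needed to prevent a data race. In the message-passing version, each worker only sends its partial sum to a "root" that receives and combines the messages, so there is no shared accumulator to protect. The function names are hypothetical, and CPython threads will not actually speed up this computation.

```python
import queue
import threading


def shared_memory_sum(data, num_threads=4):
    """OpenMP-like style: threads add partial sums to one shared total."""
    total = 0
    lock = threading.Lock()

    def worker(chunk):
        nonlocal total
        partial = sum(chunk)
        # Critical section: without the lock, two threads could read the same
        # old value of `total` and one update would be lost (a data race).
        with lock:
            total += partial

    chunks = [data[i::num_threads] for i in range(num_threads)]
    threads = [threading.Thread(target=worker, args=(c,)) for c in chunks]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return total


def message_passing_sum(data, num_workers=4):
    """MPI-like style: workers share no accumulator; they send messages."""
    mailbox = queue.Queue()  # stands in for the channel to the root ("rank 0")

    def worker(rank, chunk):
        # Each worker computes on its own chunk and "sends" the result.
        mailbox.put((rank, sum(chunk)))

    chunks = [data[i::num_workers] for i in range(num_workers)]
    threads = [
        threading.Thread(target=worker, args=(rank, chunk))
        for rank, chunk in enumerate(chunks)
    ]
    for t in threads:
        t.start()
    # The root "receives" one message per worker and combines them,
    # much like a reduction; only the root ever updates `total`.
    total = 0
    for _ in range(num_workers):
        _rank, partial = mailbox.get()
        total += partial
    for t in threads:
        t.join()
    return total


if __name__ == "__main__":
    data = list(range(1, 1001))
    print(shared_memory_sum(data))    # 500500
    print(message_passing_sum(data))  # 500500
```

The two functions compute the same answer; the difference the panel debated is where the coordination burden lies. The shared-memory version needs the lock, while the message-passing version avoids shared mutable state at the cost of explicit sends and receives.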

The final 25 minutes also went by quickly, as the presenters moved from the panel to their clusters for the Show & Tell part of the session. Audience members circulated among them, asking questions, taking pictures, getting live demonstrations, and so on.

Being able to interact with so many different cluster designers in such close proximity was clearly inspiring to the attendees, many of whom indicated that they planned to design and build their own clusters in the near future. Look for a follow-up session in a year or two!

The Budget Beowulfs Special Session was sponsored by CSinParallel, an NSF-funded project of Dick Brown [St. Olaf College], Libby Shoop [Macalester College], and myself [Calvin College]. All materials from this session are freely available at the CSinParallel website, including links to the different clusters’ websites, where disk images for the clusters’ nodes may be downloaded.

My thanks to Rick Ord and Ariel Ortiz Ramirez, who were kind enough to provide these photos of the session.

Until next time...
