Communications of the ACM

How Do We Make AI More Ethical?

Credit: AIES

Ethics underpins much of what we do, in both our professional and personal lives (or at least, it should).

There are many definitions of ethics. The Merriam-Webster online dictionary defines it as “the discipline dealing with what is good and bad and with moral duty and obligation.” An article on the site of the Markkula Center for Applied Ethics at Santa Clara University says, “Ethics is two things. First, ethics refers to well-founded standards of right and wrong that prescribe what humans ought to do, usually in terms of rights, obligations, benefits to society, fairness, or specific virtues. …Secondly, ethics refers to the study and development of one's ethical standards.” A Psychology Today article offers, “To put it simply, ethics represents the moral code that guides a person's choices and behaviors throughout their life.”

ACM is very concerned about ethics. The organization spent two years updating its Code of Ethics and Professional Conduct before unveiling it in 2018. The Preamble to the updated Code states simply, “Computing professionals' actions change the world. To act responsibly, they should reflect upon the wider impacts of their work, consistently supporting the public good. The ACM Code of Ethics and Professional Conduct expresses the conscience of the profession.”

At the third AAAI/ACM Conference on AI, Ethics, and Society (AIES) earlier this month, representatives of academic institutions around the world came together to discuss the structural and external issues that contribute to bias in artificial intelligence systems, and how far that bias might be mitigated.

(A notable exception was participants from China, many of whom could not attend because of the spread of the coronavirus, COVID-19. A week before the event's kickoff, conference organizers had emailed attendees to warn “that a very large number of participants based in China will be prevented from traveling to the conference. We ask the broader community to step up to help them, presenting their papers for them if possible, or helping with a remote or video presentation, if necessary.”)

Still, the event, organized by the Association for the Advancement of Artificial Intelligence (AAAI), ACM, and the ACM Special Interest Group on Artificial Intelligence (SIGAI), went on in New York City, co-located with the 34th AAAI Conference on Artificial Intelligence, the 32nd Annual Conference on Innovative Applications of Artificial Intelligence (IAAI-20), and the Tenth Symposium on Educational Advances in Artificial Intelligence (EAAI-20).

There were keynotes on planning for “a Just AI Future,” on the development of ethical guidelines for algorithmic accountability, on the socialization of AI and its impacts on society, and on the conflicting ethical considerations and governance approaches related to AI. There was even, during an evening reception, a performance of poetry and dance titled “Navigating Surveillant Lands.”

There were also many short sessions, presented by students, their professors, or both, on topics including fairness and the elimination of bias in AI, algorithmic transparency (having AI explain the rationale behind its decisions), and the impact of AI on the future of work.

In the session titled “Ethics under the Surface,” Jeanna Matthews, an associate professor of computer science at Clarkson University, made a short presentation on behalf of her co-authors on the paper “When Trusted Black Boxes Don't Agree: Incentivizing Iterative Improvement and Accountability in Critical Software Systems.” She explained, “The big question that we're asking in our paper is, do sufficient incentives exist for flaws in the software to be identified and fixed? And we feel, absolutely no.”

As Matthews observed, we have all encountered bugs in software and expected the manufacturer to fix them eventually, because nobody wants to buy buggy software; the market provides the incentive for the software to be fixed. However, she said, for products such as the sentencing software used in the criminal justice system, which has been found to be racially biased, “market forces really are utterly insufficient” to remediate that built-in bias through debugging and iterative improvement; the software producers have no real incentive to do so.

On top of that, the internal workings of some critical software products are protected as trade secrets, and there can be “hurdles in terms of terms of service clauses that make testing or publishing the results difficult,” Matthews said.

Matthews and her colleagues suggest that whenever public money is used to purchase critical software systems, the purchasing agency should require internal documents such as risk assessments and design documents, “and that isn't just even saying source code; source code, which would be even better.” She added, “One of our big asks is that all of these systems should require scriptable interfaces. One of the big parts of our job has been to put testing harnesses around these [software products] so we can run tens of thousands of tests against them. There's no reason why all of these vendors couldn't provide a scriptable interface. They should be designed to be much more reasonably comparable, and that would be a great role for organizations like NIST. Requirements for validation studies to clearly specify the range of their testing, not all-or-nothing validation. And things to reward and incentivize third-party testing.”

Matthews said we need to add “the right incentives to make critical software responsive to the needs of individuals, to society, and to the law, and not just to the needs of customers, deciders, and developers. And we should really not be deploying critical software systems in an environment without a credible plan to incentivize iterative improvement.”

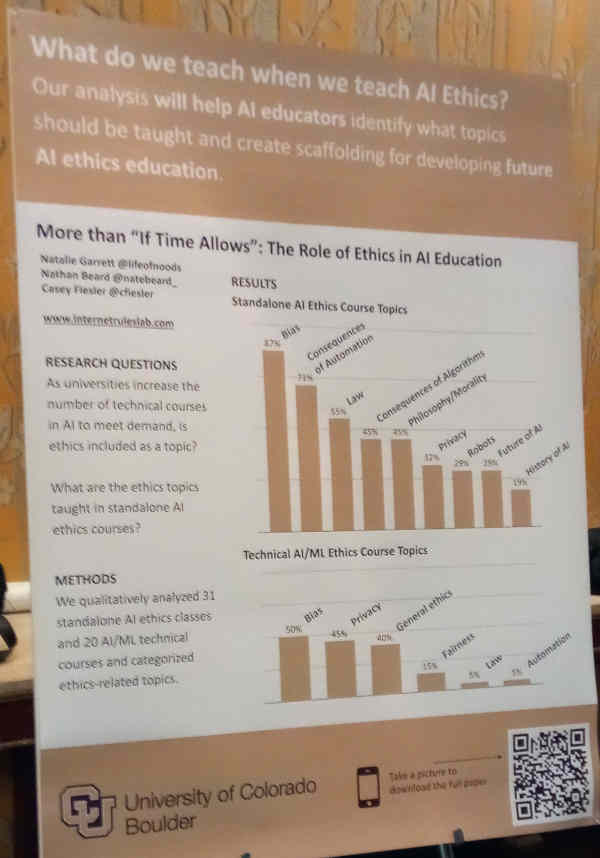

University of Colorado at Boulder Ph.D. student and ethics researcher Natalie Garrett and her colleagues wondered how ethics was being presented in courses on AI. For their paper “More Than 'If Time Allows': The Role of Ethics in AI Education,” Garrett and her co-authors, Nathan Beard, a Ph.D. student at the University of Maryland, and Casey Fiesler, an assistant professor in the departments of Information Science and Computer Science at the University of Colorado at Boulder, “analyzed standalone AI ethics course[s], and also some technical machine learning and AI courses to understand what topics are being taught.”

Garrett said previous work by Fiesler had found “only about 12% of technical AI courses actually included ethics. So we wanted to look at standalone ethics and technical courses, and compare those two.” The results of their latest research, she said, “inform what ethics topics could be added to technical courses or as standalone AI ethics classes.”

She declined to discuss the results of their research, suggesting instead that conference attendees look for the findings on the team's poster.

Lawrence M. Fisher is senior editor, news, for ACM Magazines.
