Communications of the ACM

Why Are There So Many Programming Languages?

I was once asked by a friend of mine back in the 1990s why there were so many programming languages. "Why wasn't there just one good programming language?" he asked. He was computer-savvy but not a developer, at least not a full-time one. I replied that programming languages are often designed with certain tasks or workloads in mind, and in that sense most languages differ less in what they make possible and more in what they make easy. That last part was a quote I half-remembered somebody else saying. It sounded smart and appropriate enough, but the truth was I didn't really know why there were so many programming languages. The question remained stuck in my head.

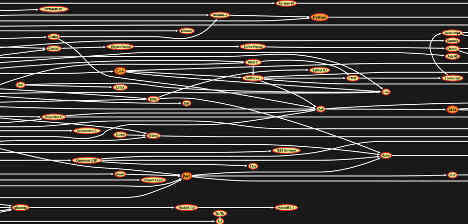

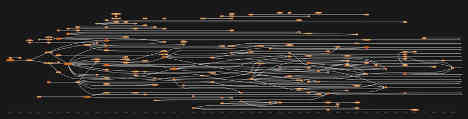

I had the opportunity to visit the Computer History Museum in Mountain View, CA, a few years ago. It's a terrific museum, and among its many exhibits is a wall-size graph of the evolution of programming languages. The graph is so big that anyone who has ever written "Hello World" in anything feels the urge to stick their nose against the wall and search it section by section to try to find their favorite languages. I certainly did. The next instinct is to trace the "influenced" edges of the graph backwards in time with an index finger. Or forwards, depending on how old the languages happen to be.

The graph also tells a story if you stand *way* back.

A title to the left reads…

"This chart shows about 150 of the thousands of programming languages that have been invented. Some are general-purpose, while others are designed for particular kinds of applications. Few new languages are truly new. The arrows show how newer ones might have been influenced by older ones."

… which makes it clear that even this complex picture is but a sample of a larger dynamic. The graph's timeline runs from 1954 to 2000, and however many programming languages existed when it was created, there are even *more* now. The software world just can't stop creating new programming languages.

The Good Old Days

There is so much that can be taken for granted in computing today. Back in the early days everything was expensive and limited: storage, memory, and processing power. People had to walk uphill and against the wind, both ways, just to get to the computer lab, and then stay up all night to get computer time. One thing that was easier back then was that the programming language namespace was greenfield, and the first languages of the 1950s and 1960s had the luxury of being named precisely for the thing they did: FORTRAN (Formula Translator), COBOL (Common Business Oriented Language), BASIC (Beginner's All-purpose Symbolic Instruction Code), ALGOL (Algorithmic Language), LISP (List Processor). Most people probably haven't heard of SNOBOL (String Oriented and Symbolic Language, 1962), but one doesn't need many guesses to determine what it was trying to do. Had object-oriented programming concepts been more fully understood at the time, we might be coding in something like "OBJOL," an unambiguously named object-oriented language, at least by the naming patterns of the era.

It's worth noting and admiring the audacity of PL/I (1964), which was aiming to be that "one good programming language." The name says it all: Programming Language 1. There should be no need for 2, 3, or 4. Though PL/I's plans of becoming the Highlander of computer programming didn't play out as its designers intended, they were still pulling on a key thread in software: why so many languages? That question was already being asked as far back as the early 1960s.

The Here And Now

Scala (2003), Go (2009), Rust (2010), Kotlin (2011), and Swift (2014) are just a few examples of languages created since 2000. There are plenty more. In today's technical landscape, it seems there should already be something for just about every combination of these basic language attributes…

- License
  - Open source (various license types), commercial
- Platform
  - Operating system, hardware support
- Language Paradigm
  - Procedural, functional, object-oriented, etc.
- Typing System
  - Dynamic, static, etc.
- Concurrency
  - Single-threaded, multi-threaded
- Memory Management
  - Automatic garbage collection, manual
- Execution
  - Interpreted, compiled to a virtual machine, compiled to native code, etc.
- Other Language Features
  - A potentially huge list: built-in data types and data structures, database and networking capabilities, and other functional capabilities and utilities.

… to serve every low-level, high-level, functional, procedural, object-oriented, single-threaded, multi-threaded, compiled, or scripting need on any platform. Given that, why are new languages still being created? The biggest theme I see is control.

Control and Fortune

Microsoft's main development language offerings in the mid-1990s were Visual Basic and Visual C++, both of which were derived from older nodes on the Computer History Museum language graph. Visual Basic was popular for building front-end applications for the Windows desktop platform, but lacked many advanced language features (e.g., data structures, threading). Visual C++ was at the other end of the spectrum: developers could do just about anything, with the catch being that C++ is complex. There was an opportunity for a "language in the middle," so to speak. Then Java burst onto the scene in 1996. The fact that Java was a full-featured object-oriented language with fewer of C++'s sharp edges was compelling, and I even remember kicking the tires on Microsoft's Visual J++ when it first came out. Everybody was joining the Java party.

One of Java's major design priorities was platform portability. Unfortunately, that was not one of Microsoft's goals, at least as far as non-Microsoft platforms went, and that put Microsoft and Sun Microsystems, the company behind Java at the time, on a collision course that resulted in lawsuits starting in 1997. The strained relationship eventually led Microsoft to release another language, C# (2002), that looked a whole lot like Java but wasn't. C# filled the "middle" spot in the Microsoft development stack, and, unlike Java, it was something Microsoft could control.

It's almost a strange testament to Java's widespread appeal that those weren't the only Java lawsuits. In 2010, Oracle, which had become the owner of Java when it acquired Sun Microsystems, sued Google over its use of Java in the Android mobile platform. The decade-long legal battle was finally decided by the U.S. Supreme Court in 2021.

Total Design Control

Maintaining and evolving an existing system is challenging, and I've written multiple BLOG@CACM posts on this topic, such as "Log4j And The Thankless High Risk Task Of Managing Software Component Upgrades" and "The Art Of Speedy Systems Conversions." The paradox of software growth is that greater adoption of a system leads to greater usage, which can lead to greater success, and then to even greater adoption. But as adoption and usage increase, the system can become harder to change, especially in big ways, because such changes risk breaking backward compatibility. It's not impossible to manage, just hard.

Managing the growth of a programming language is arguably one of the hardest of those hard cases. Programming language users are developers, and good developers are not only productive but also have a way of using language features creatively, not always as the authors intended. If there are edge cases to be found, developers will find them, especially at scale. Go (2009) is an interesting example of wanting to do both more and less. One driver of its creation was the need for something that would deploy efficiently and predictably in Google's containerized cloud environment. Another driver was the desire for a language that was powerful, especially with respect to networking and concurrency, but not too powerful with respect to language features, as the authors were apparently also motivated by a "shared dislike" of C++'s complexity. Hypothetically, Google could have addressed the first driver by building a new compiler and run-time engine for an existing language it was already using. That is not a simple task, but Google has a lot of smart people, so it was a possibility. But changing what developers are doing, and how they are doing it, requires changes to a programming language's syntax and capabilities, and those types of changes are hard, particularly when developers are being told that certain things aren't allowed anymore or must be done differently. Sometimes it is easier to create something new for the use case at hand and start over, at least for those with the resources, which Google has. Again. And another node gets added to the wall-sized graph of computer languages at the Computer History Museum.

Go was preceded by an unrelated but more emphatic language called Go! (with an exclamation mark) in 2003, demonstrating that language namespace differentiation is becoming increasingly difficult. Why did Google still use that name despite the collision? I don't know. Go figure.

Special Thanks

Many thanks to Dag Spicer from the Computer History Museum for emailing me the PDF of the graph of the evolution of computer programming languages. Thank you!

References

- Computer History Museum - https://computerhistory.org/

The Good Old Days

- Timeline of programming languages - https://en.wikipedia.org/wiki/Timeline_of_programming_languages

- PL/I (Can't blame them for trying!) - https://en.wikipedia.org/wiki/PL/I

- Highlander (film) ("There can be only one") - https://en.wikipedia.org/wiki/Highlander_(film)

The Here And Now

- Programming language paradigms - https://en.wikipedia.org/wiki/Comparison_of_multi-paradigm_programming_languages

Control

- Sun/Microsoft Java Lawsuits

- Visual J++ (This was briefly a thing) - https://en.wikipedia.org/wiki/Visual_J%2B%2B

- Oracle-Google Java Lawsuit - https://www.bbc.com/news/technology-56639088

- Go! (2003) - https://en.wikipedia.org/wiki/Go!_(programming_language)

- Go (2009) - https://en.wikipedia.org/wiki/Go_(programming_language)

Selected BLOG@CACM Posts

- Log4j And The Thankless High Risk Task Of Managing Software Component Upgrades

- The Art Of Speedy Systems Conversions

Doug Meil is a software architect in healthcare data management and analytics. He also founded the Cleveland Big Data Meetup in 2010. More of his ACM posts can be found at https://www.linkedin.com/pulse/publications-doug-meil

Comments

Paul McJones

Very nice article. Readers interested in learning about the history of FORTRAN, COBOL, BASIC, ALGOL, LISP, SNOBOL, PL/I, as well as APT, JOVIAL, GPSS, SIMULA (hint: it influenced Smalltalk and C++), JOSS, and APL should take a look at the first History of Programming Languages Conference -- available for free here: https://dl.acm.org/doi/book/10.1145/800025
