Communications of the ACM

The Base-Rate Neglect Cognitive Bias in Data Science

We asked 98 students who were enrolled in either a data science or a machine learning course the following question, entitled the lion classification question:

A machine learning algorithm was trained to detect photos of lions. The algorithm does not err when detecting photos of lions, but 5% of photos of other animals (in which a lion does not appear) are detected as a photo of a lion. The algorithm was executed on a dataset with a lion-photo rate of 1:1000. If a photo was detected as a lion photo, what is the probability that it is indeed a photo of a lion?

(a) About 2%

(b) About 5%

(c) About 30%

(d) About 50%

(e) About 80%

(f) About 95%

(g) Not enough data is provided to answer the question

The results are presented in the following pie chart, in which green represents the correct answer and red represents wrong answers:

As can be seen, the majority of students answered the lion classification question incorrectly (61%); among them, the most common erroneous response was 95% (given by 32% of the participants).

The lion classification question is analogous to a problem proposed by Casscells et al. (1978). Casscells et al. asked 20 interns, 20 fourth-year medical students, and 20 attending physicians at four Harvard Medical School teaching hospitals the following question, known as the medical diagnosis problem:

If a test to detect a disease whose prevalence is 1/1000 has a false positive rate of 5%, what is the chance that a person found to have a positive result actually has the disease, assuming you know nothing about the person's symptoms or signs?

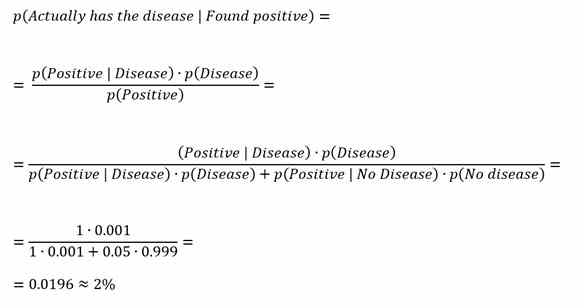

Using Bayes' Theorem (see Appendix), the correct answer to both the medical diagnosis problem and to the lion classification question can be calculated (and is 2%). Nevertheless, only 18% of the participants in Casscells et al.'s experiment gave the correct answer. Several explanations were suggested for this phenomenon (Koehler, 1996). The base-rate neglect fallacy, identified by Kahneman and Tversky (1973), is one of these explanations and it suggests that this mistake results from ignoring the base rate of the disease in the population. Another explanation was suggested by Leron and Hazzan (2009), who explained this error using the dual-process theory (Kahneman, 2002).

In the lion classification question, the students are asked to evaluate the probability that a given photo will indeed contain a lion if a machine learning algorithm detects the photo as a lion photo. In other words, they are asked to evaluate the true positive rate of the lion detector. The false positive rate, that is the probability that a given photo will not contain a lion even though it is detected as a lion photo, is given as 5% (as is the false positive rate in the medical diagnosis problem). Since the false negative rate (lion photos that are not detected) is given as 0, all lion photos will be detected. The question is, therefore, what will be the percentage of lion photos in the detected-as-lion-photos group. This percentage depends on the ratio of lion photos in the dataset, that is, the base rate of lion photos, which according to the base-rate neglect bias, humans tend to ignore. Similar to the medical diagnosis problem, the base rate of lions is given as 1:1000, so based on Bayes' Theorem, the true positive rate is about 2%.

As can be seen, the machine learning learners exhibited the same patterns found by Casscells et al. (1978): The majority ignored the base rate and answered the lion classification question incorrectly (61%) and among those, the most common erroneous response was 95% (given by 32% of the participants). While 18% of the participants in Casscells et al.'s experiment gave the correct answer, in our experiment, 39% answered this question correctly, more than double the rate found by Casscells et al.

There are two possible explanations for this difference. First, the question in our experiment was formulated as a multiple-choice question (rather than as an open question as in the case of Casscells et al.). This provided the participants with several optional answers to consider. Participants could also guess the correct answer if they so desired. The second explanation stems from the participants' answers to the open question they were asked immediately following the lion classification question, which requested them to explain their selected answer. More than half of the participants who answered the question correctly explained their answer by quoting Bayes' Theorem, which they used to calculate the answer precisely. It is, therefore, reasonable to assume that our population had more background in probability than did the population in Casscells et al.'s experiment.

Even though the percentage of correct answers in our experiment was more than double that of the original, medicine-related experiment, the majority of our participants, who were at the time taking either a data science or a machine learning course, failed to answer the question correctly. This phenomenon is meaningful and alarming, since the evaluation of a detector's prediction power is a crucial stage in the data science workflow, based on which decision may be made in real-life situations. (Think about terrorism detection, various medical treatments, and so on.) Therefore, while an ongoing effort is being made to improve machine learning algorithms and make them more explainable and interpretable, it is also the role of educators to improve human understanding of machine learning algorithms.

In our blog Mitigating the Base-Rate Neglect Cognitive Bias in Data Science Education, we suggest one way of doing just that.

References

Casscells, W., Schoenberger, A., and Graboys, T. B. (1978). Interpretation by physicians of clinical laboratory results. New England Journal of Medicine, 299(18), 999–1001. https://doi.org/10.1056/NEJM197811022991808

Kahneman, D. (2002). Maps of bounded rationality: A perspective on intuitive judgment and choice. Nobel prize lecture, december 8. Retrieved December, 21, 2007.

Kahneman, D. and Tversky, A. (1973). On the psychology of prediction. Psychological Review, 80(4), 237–251. https://doi.org/10.1037/h0034747

Koehler, J. J. (1996). The base rate fallacy reconsidered: Descriptive, normative, and methodological challenges. Behavioral and Brain Sciences, 19(1), 1–17. https://doi.org/10.1017/S0140525X00041157

Leron, U. and Hazzan, O. (2009). Intuitive vs analytical thinking: Four perspectives. Educational Studies in Mathematics, 71(3), 263–278.

Appendix – Solution of the medical diagnosis problem using Bayes' Theorem

We note that in the original phrasing of the question, the conditional probability of True-Positive is not given, and is assumed to be 1, meaning that the test is positive whenever the patient has the disease.

Koby Mike is a Ph.D. student at the Technion's Department of Education in Science and Technology under the supervision of Orit Hazzan; his research focuses on data science education. Orit Hazzan is a professor at the Technion's Department of Education in Science and Technology. Her research focuses on computer science, software engineering and data science education. For additional details, see https://orithazzan.net.technion.ac.il/.

No entries found