Communications of the ACM

New Research Vindicates Fodor and Pylyshyn: No Explainable AI Without 'Structured Semantics'

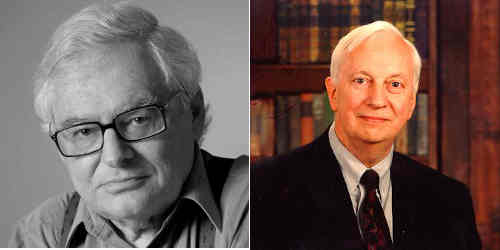

Credit: David Beyda Studio (Fodor), Rutgers School of Arts and Sciences Center for Cognitive Science (Pylyshyn).

Explainable AI (XAI)

As deep neural networks (DNNs) are deployed in applications that decide loan approvals, job applications, and court bail, or that make life-or-death decisions such as suddenly stopping a vehicle on a highway, explaining these decisions, rather than merely producing a prediction score, is of paramount importance.

Research in explainable artificial intelligence (XAI) has recently focused on the notion of counterfactual examples. The idea is intuitively simple: one first crafts counterfactual inputs that produce some desired output; reading off the hidden units would then, presumably, explain why the network produced some other output for the original input. More formally,

"Score p was returned because variables V had values (v1, v2, ...) associated with them. If V instead had values (v′1 , v′2 , ...), and all other variables had remained constant, score p′ would have been returned."

The following would be a specific example (see Wachter et al., available here):

"You were denied a loan because your annual income was £30,000. If your income had been £45,000, you would have been offered a loan."

An excellent paper (Browne and Swift, 2020; henceforth B&W) has recently shown, however, that counterfactual examples are just slightly more meaningful adversarial examples, that is, examples produced by applying small, imperceptible perturbations to inputs that cause the network to misclassify them with very high confidence (see this and this for more on adversarial attacks). Moreover, counterfactual examples ‘explain’ what some feature should have been to get the right prediction, but "without opening the black box," that is, without explaining how the algorithm works. B&W go on to argue that counterfactual examples do not provide a solution to explainability and that "there can be no explanation without semantics" (emphasis in original). In fact, B&W make the stronger suggestion that

- "we either find a way to extract the semantics presumed to exist in the hidden layers of the network," or

- "we concede defeat."

Our suggestion here will be to concede defeat (as we argued before here and here), since the first option cannot be achieved. Below, we explain why.

The Ghosts of Fodor & Pylyshyn

While we wholeheartedly agree with B&W that "there can be no explanation without semantics," we think their hope of somehow "producing satisfactory explanations for deep learning systems" by finding ways "to interpret the semantics of hidden layer representations in deep neural networks" cannot be achieved and precisely for reasons outlined over three decades ago in Fodor & Pylyshyn (1988).

Before we explain where the problem lies, we note here that purely extensional models such as neural networks cannot model systematicity and compositionality because they do not admit symbolic structures that have productive syntax and a corresponding semantics. As such, representations in NNs are not really "signs" that correspond to anything interpretable — but are distributed, correlative and continuous numeric values that on their own mean nothing that can be conceptually explained. Stated in yet simpler terms, the subsymbolic representation in a neural network does not on its own refer to anything conceptually understandable by humans (a hidden unit cannot on its own represent any object that is metaphysically meaningful). Instead, it is a collection of hidden units that together usually represent some salient feature (e.g., whiskers of a cat). But that is precisely why explainability in NNs cannot be achieved, namely because the composition of several hidden features is undecidable — once the composition is done (through some linear combination function), the individual units are lost (we’ll show that below).

In other words, explainability — which by the way was addressed very early on in AI and decades before deep learning — is essentially some form of "an inference in reverse" — it is a process of backtracking our steps to "justify" the final decision. But if compositionality in NNs is irreversible, then explainability can never be achieved. It is that simple!

Explainability is "Inference in Reverse" and DNNs Cannot Revert their Inference

In previous posts (links provided above), we discussed why Fodor and Pylyshyn concluded that NNs cannot model systematic (and thus explainable) inferences. In symbolic systems there are well-defined compositional semantic functions that compute the meaning of a compound as a function of the meanings of the constituents. But that composition is invertible; that is, one can always recover the components that produced the output, precisely because in symbolic systems one has access to the "syntactic structure" that records how the components were assembled. None of this is true in NNs. Once vectors (tensors) are composed in a NN, their decomposition is undecidable: the number of ways a vector, or even a scalar, can be decomposed is infinite.
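The contrast can be sketched in a few lines. The `Compound` class and the element-wise sum used for vector composition are illustrative assumptions, not a claim about how any particular symbolic system or network composes its representations.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Compound:
    """A symbolic compound that keeps its syntactic structure (hypothetical type)."""
    head: str
    parts: tuple


def compose_symbolic(head, *parts):
    """Build a compound while recording exactly how it was assembled."""
    return Compound(head, tuple(parts))


def decompose_symbolic(compound):
    """Recover the constituents; exact, because the structure was kept."""
    return compound.parts


def compose_vector(u, v):
    """Element-wise sum: one simple (assumed) way of composing two vectors."""
    return tuple(a + b for a, b in zip(u, v))


likes = compose_symbolic("likes", "John", "classic rock")
assert decompose_symbolic(likes) == ("John", "classic rock")

# Two different pairs of constituents give the same composed vector,
# so the result alone cannot tell us which pair produced it.
first = compose_vector((1.0, 2.0), (3.0, 4.0))
second = compose_vector((0.5, 5.0), (3.5, 1.0))
assert first == second == (4.0, 6.0)
```

In the symbolic case the constituents come back unchanged; in the vector case the composed value is compatible with more than one pair of inputs.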

To show why this is the heart of the issue, let us consider the suggestion B&W made to extract semantics in DNNs so as to achieve Explainability. What B&W suggested is to have something along these lines:

The input image was labelled "building" because hidden neuron 41435, which generally activates for hubcaps, had an activation of 0.32. If hidden neuron 41435 had an activation of 0.87 the input image would have been labelled "car."

To see why this would not lead to explainability, it suffices to note that it is not enough to demand the activation of neuron 41435 be 0.87. Suppose, for simplicity, that neuron 41435 had only two inputs, x1 and x2. What we have now is shown in figure 1 below:

Figure 1. A single neuron with two inputs with the output of 0.87

Now suppose our activation function f is the popular ReLU function, f(z) = max(0, z), where z is the weighted sum of the inputs, z = w1x1 + w2x2. The output 0.87 is then produced whenever z = 0.87, which means one can get the output 0.87 for the values of x1, x2, w1, and w2 shown in the table below.
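A minimal sketch of the same point in code: for several different choices of x1, x2, and w1, a value of w2 is solved for so that the neuron outputs 0.87. The specific input and weight values are illustrative assumptions, and the neuron is assumed to have no bias term.

```python
import math


def relu(z):
    """ReLU activation: max(0, z)."""
    return max(0.0, z)


def neuron(x1, x2, w1, w2):
    """A single two-input neuron with no bias term (assumed)."""
    return relu(w1 * x1 + w2 * x2)


def solve_w2(x1, x2, w1, target=0.87):
    """Pick w2 so that w1*x1 + w2*x2 equals target (requires x2 != 0)."""
    return (target - w1 * x1) / x2


# Illustrative (x1, x2, w1) choices; each one gets its own w2.
choices = [(1.0, 1.0, 0.5), (2.0, 0.5, 0.1), (0.3, 3.0, -1.0), (10.0, 0.1, 0.08)]

rows = []
for x1, x2, w1 in choices:
    w2 = solve_w2(x1, x2, w1)
    rows.append((x1, x2, w1, w2, neuron(x1, x2, w1, w2)))

print(f"{'x1':>8}{'x2':>8}{'w1':>8}{'w2':>8}{'output':>8}")
for x1, x2, w1, w2, out in rows:
    print(f"{x1:>8.2f}{x2:>8.2f}{w1:>8.2f}{w2:>8.2f}{out:>8.2f}")

# Every row is a different configuration with (numerically) the same output.
assert all(math.isclose(out, 0.87) for *_, out in rows)
```

Any other triple with a nonzero x2 could be added to `choices` and would yield yet another configuration with the same activation.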

Looking at the table above, it is easy to see that there are an infinite number of linear combinations of x1, x2, w1, and w2 that would produce the output 0.87. The point here is that compositionality in NNs is not invertible, thus no meaningful semantics can be captured from any neuron nor from any collection of neurons. In keeping with B&W’s slogan that "there can be no explanation without semantics," we state that no explanation can ever be obtained from NNs. In short, no semantics without compositionality, and no explanation without semantics, and DNNs cannot model compositionality. This can be formalized as follows:

1. There can be no explanation without semantics (Browne and Swift, 2020)

2. There can be no semantics without invertible compositionality (Fodor & Pylyshyn, 1988)

3. Compositionality in DNNs is irreversible (Fodor & Pylyshyn, 1988)

=> There can be no explainability (no XAI) with DNNs

QED.
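Written schematically, the argument is two applications of modus tollens. The predicate letters E (admits explanation), S (has semantics), and C (has invertible compositionality) are notation introduced here for illustration and do not appear in the cited papers.

```latex
\begin{align*}
&\text{(1)}\quad E(s) \rightarrow S(s) && \text{no explanation without semantics} \\
&\text{(2)}\quad S(s) \rightarrow C(s) && \text{no semantics without invertible compositionality} \\
&\text{(3)}\quad \neg C(\mathrm{DNN}) && \text{compositionality in DNNs is irreversible} \\
&\therefore\quad \neg S(\mathrm{DNN}) && \text{from (2) and (3)} \\
&\therefore\quad \neg E(\mathrm{DNN}) && \text{from (1) and the previous line}
\end{align*}
```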

Incidentally, the fact that compositionality in DNNs is irreversible has consequences beyond the inability to produce explainable predictions, especially in domains that require higher-level reasoning such as natural language understanding (NLU). In particular, such systems cannot explain how a child learns to interpret an infinite number of sentences just from a template such as (<human> <likes> <entity>), since "John", "the girl next door", "the boy that always comes here wearing an AC/DC t-shirt", etc. are all possible instantiations of <human>, and "classic rock", "fame", "Mary’s grandma", "running at the beach", etc. are all possible instantiations of <entity>. Because such systems do not have ‘memories’ and because their composition cannot be inverted, they would need, in theory, an infinite number of examples to learn just this simple structure.
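To make the template point concrete, here is a small sketch of how a single structured template covers every combination of its slot fillers. The `instantiate` helper and the short filler lists are hypothetical and only illustrate the idea.

```python
from itertools import product

# A few of the fillers named above; the lists are open-ended in principle.
humans = ["John", "the girl next door"]
entities = ["classic rock", "fame", "running at the beach"]


def instantiate(humans, entities, verb="likes"):
    """Fill the (<human> <likes> <entity>) template with every filler pair."""
    return [f"{h} {verb} {e}" for h, e in product(humans, entities)]


sentences = instantiate(humans, entities)
for sentence in sentences:
    print(sentence)

# One template yields len(humans) * len(entities) sentences, and a new
# filler extends the set without any new example of the template itself.
assert len(sentences) == len(humans) * len(entities)
```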

Finally, we would like to note that the criticism of NNs as a cognitive architecture that was raised over three decades ago by Fodor & Pylyshyn (1988)—where they show why NNs cannot model systematicity, productivity and compositionality, all of which are needed to speak of any "semantics"—is a convincing criticism that has never been answered. As the need to address the problem of explainability in artificial intelligence becomes critical, we must re-visit that classic treatise that shows the limitations of equating statistical pattern recognition with advances in AI.

Walid Saba is Senior Research Scientist at the Institute for Experiential AI at Northeastern University. He has published over 45 articles on AI and NLP, including an award-winning paper at KI-2008.

Comments

Arvind Punj

Great article. I have been trying to get explanations for vectors generated by Universal Sentence Encoder and have found what is discussed here to be true. However, it is also true that experimenting on the input itself yields some explanation of how the neural net is working, and often this is enough to draw conclusions. For example, one experiment on document classification using imaging showed that removing certain pixels determined whether the document was classified as an invoice or a bill of lading. Can you comment on whether this then becomes part of the semantics that can be discovered, as you discussed? My email is [email protected]; I will be glad to hear your view.
