Communications of the ACM

Is Computing Innovation Getting Harder?

Originally presented in the Distant Whispers blog.

The angst, or perhaps irritation, that we feel at times about the rate of innovation was captured pithily by Peter Thiel: "We wanted flying cars, instead we got 140 characters." I am going to confine my thoughts to the world I know best, innovations in the field of computing. Many of us, inside the ivory tower and outside, have felt at times that research is not quite delivering at the rate we had hoped it would, or rather, at the rate at which we think, anecdotally, research delivered goodies in the golden past. By the golden past, I mean roughly the last 100 years, because some monumental innovations from that window are experienced by us on a regular basis even today. A cursory inspection turns up gems like the wireless radio, the transistors that miniaturized those radios, and air travel.

There is merit in the argument, fueled as it is by documentaries that recall, in sepia-toned hues, the grand leaps of science and technology in the past. There is also the pure power of math to bolster this argument: if you are at 1 and you grow to 2, an absolute growth of 1, you have just grown by 100%. Now if you are at 100 and you grow by that same absolute amount, you have grown by a puny 1%. The variable here is the base of technological goodies that we are building on.
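The arithmetic above can be sketched in a few lines of Python. This is only an illustration of the percentage argument; the function name `relative_growth` and the sample bases are assumptions chosen for the example, not anything drawn from patent or publication data.

```python
def relative_growth(base: float, gain: float) -> float:
    """Return the gain as a percentage of the base it is added to."""
    if base <= 0:
        raise ValueError("base must be positive")
    return 100.0 * gain / base


# The same absolute gain of 1 shrinks in relative terms as the base grows.
for base in (1, 10, 100):
    print(f"base={base:>3}, gain=1 -> {relative_growth(base, 1):.0f}% growth")
```

Starting from 1, a gain of 1 registers as 100% growth; starting from 100, the same gain registers as 1%.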

I believe there is some merit in the collective angst that society feels at the amounts of money being poured into research, which is often sensationalized in the popular press. And the outcomes are not tangible within the short attention span that we have. If a $10-million project got the green light three years back, how come I see no tangible benefit of it anywhere around me? I would like to make the argument that our innovation engine is working in overdrive, and that the fact that progress seems Sisyphean at times is partly an optical illusion and partly a natural law of progression. My argument rests on two constituent claims.

- In terms of quantitative measures, our innovation has sped up

- The discoveries coming out of our innovation are at a higher level of our needs pyramid and therefore less existentially felt

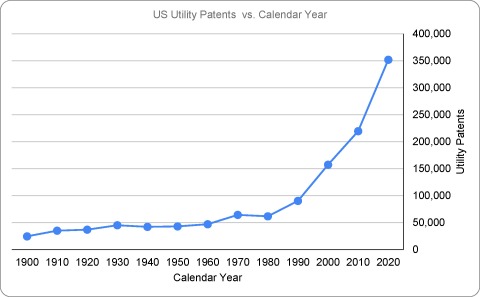

First, consider the quantitative metrics that we use to measure innovation: number of patents, number of publications, and number of journals in a field of study. These have all been climbing over the last 50 years.

Trend of U.S. utility patents since 1900. Source of data: U.S. Patent and Trademark Office.

And today we are still capable of putting together the technical might of hundreds of scientists and technologists from across the globe to accomplish large projects, like the Large Hadron Collider or the massive AI engines that are figuring out how to go from any place to any other place on the globe. And it is worthwhile to remember that some of the innovations from the recent past enabled us to coordinate the efforts of so many.

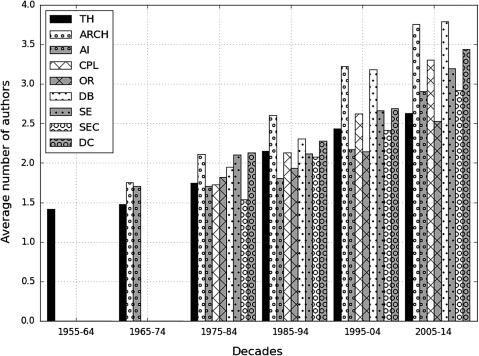

On a less grand scale, research has become much more of a team enterprise now. That is both a sensible maturation of our technology landscape and a desirable progression. It is a sensible maturation because we are tackling harder problems, and these need the expertise of multiple researchers, often from different, perhaps closely related, technical areas. It is desirable because, in my observation, it leads to both greater fulfillment for researchers and faster progress, once we have conquered the Tower of Babel syndrome.

Evolution in the average number of authors for the oldest Computer Science areas.

Source: Fernandes, João M., and Miguel P. Monteiro. "Evolution in the number of authors of computer science publications." Scientometrics 110, no. 2 (2017): 529-539.

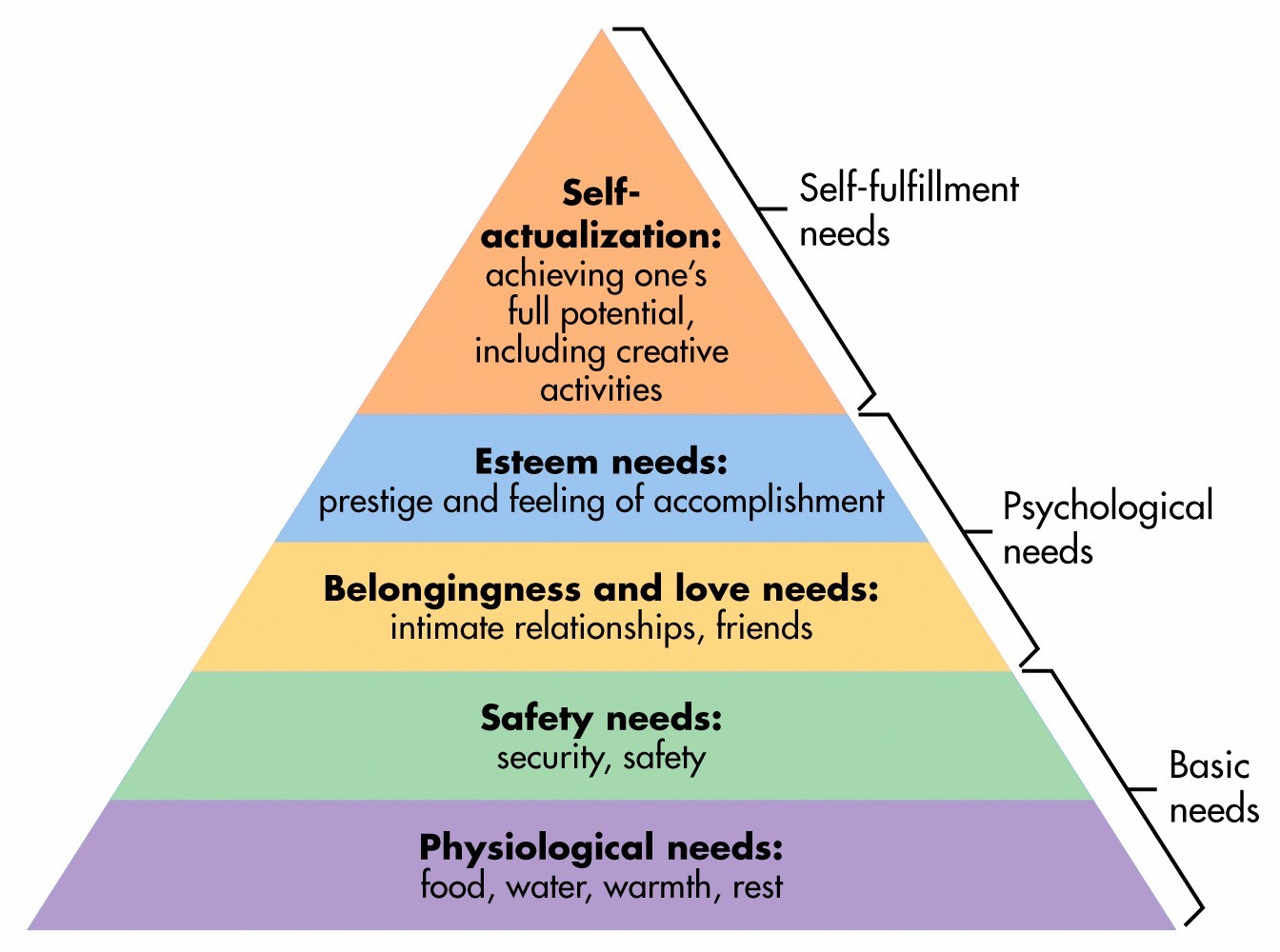

Second, our earlier technological advances moved us up from a very low base, and so their effects were indeed of high impact. This is the classic economic principle of diminishing returns. When we have no computer program to help us write our documents, and one comes along, the effect seems profound. But when that software "just" becomes better at correcting typographical errors, we feel less impressed. This view is eloquently put forth by Nicholas Carr in "Utopia Is Creepy," where he draws an analogy to Maslow's hierarchy of needs.

Maslow's needs hierarchy.

In my own small arc of computing progress, I find myself falling into this trap too. The first distributed system that resolved all conflicts among multiple users felt earth-shattering to me. And then the subsequent systems that showed how to do this at large scales, even global scales, somehow paled beside the early ones. But when I think more about this, I realize that technology has this relentless arc of progress. We get more sophisticated technology, technology that makes us more productive, safer, and saner.

So, to sum up, it does appear at times that all this funding of research and development of technology is not bearing fruit fast enough. There are definitely unproductive lines of research and development, ones where federal agencies have sunk much money into a bottomless hole. But I believe there are enough fruits coming out of the work to justify pressing on the gas of our innovation pipeline, not pulling back on it. Sure, we will need to channel the innovation engine better in some parts so that society at large can benefit from it more. This will need careful shepherding by technology leaders and policy makers, and concerted pulling by us technologists. But the innovation engine is broadly healthy here in the U.S., and we should put in our best creative abilities to amplify it.

Saurabh Bagchi is a professor of Electrical and Computer Engineering and Computer Science at Purdue University, where he leads a university-wide center on resilience called CRISP. His research interests are in distributed systems and dependable computing, while he and his group have the most fun making and breaking large-scale usable software systems for the greater good.
