Communications of the ACM

VOT Challenge: Computer Vision Competition

Currently, machine learning methods are undergoing explosive development. New ideas and solutions never stop appearing, which in many areas have already turned the rules of the game upside down so neural networks can outperform humans. For example, graphical neural networks, if not yet superseded by artists, took over at least part of their routine work, especially in the computer games industry. ChatGPT is a threat to copywriters and many other professions, from lawyers to teachers.

Significant impact on the rapid growth in the field of machine learning is provided by various competitions that are held in different areas of knowledge. Often, competition winners receive a significant cash prize, but it is not uncommon that no financial support is implied. For a team, participation in such competitions can be just an opportunity to gain new knowledge, gain exposure, and obtain access to a good dataset in this or that area.

Machine learning competitions

So, where do these competitions take place? Everyone knows about competitions on the multi-topic platform kaggle.com, but there are others. Offhand, we can name trustii.io, codalab, bitgrit.net, and eval.ai. Moreover, a number of platforms have their own specialization. For example, aicrowd.com and drivendata.org position themselves as machine learning competitions with social impact, while xeek.ai is dedicated to geoscience, and miccai.org to medical images computing, CrunchDAO.com looks into financial markets, and zindi.africa is a competition related to the African continent. Some competitions are held within the framework of scientific conferences, such as NeurIPS. There are even sites like mlcontests.com that bring together the most interesting competitions across platforms.

VOT Challenge

In this blog, we will introduce you to one not-very-well-known (but quite interesting) ML competition. If you are doing research in the field of computer vision, you have probably come across VOTXXXX Benchmarks. These are the results of The Visual Object Tracking Challenge (VOT Challenge; votchallenge.net).

This challenge takes place in the spring and summer, and is related to the development of algorithms for tracking objects in videos. Its strong side is that it provides a standardized framework for evaluating and comparing tracking algorithms by providing a dataset of annotated videos with annotations. A ready-made library and test case preparations adapted for Python and Matlab are available. Also, there is quite detailed documentation; examples of trackers from previous competitions are available, and the organizers actively communicate with the community through forums and mailing lists.

At the same time, the challenge is aimed at enthusiasts: there is no prize value except for the opportunity to become a co-author or be mentioned in the annual publication based on the results of the competition.

Back to the past

Now, just a few words about the history of the competition and its specific features.

2013: The idea of the competition emerged from the absence of standard evaluation for visual object trackers. Evaluation methodology for visual object tracking was strongly needed. For this, a small annotated dataset and a dedicated evaluation toolkit were created, and the latter was developed specifically for the challenge, which was held as part of the ICCV2013 conference.

2014: The evaluation toolkit obtained the TraX integration protocol that offered more freedom for integration and faster execution trackers. The dataset was expanded.

2015: New evaluation measures were added: robustness and accuracy. The dataset was increased even more, and the thermal-image-based tracking sub-challenge was announced.

2016-2019: The challenge developed, the annotation procedure modified, new sub-challenges appeared, including those dealing with long-term tracking and multi-modal sequences. Datasets continued to develop, and among them, there were datasets based on thermal images and RGB+depth images.

2020-2021: The evaluation toolkit was finally implemented in Python, opening up the possibility for new teams to participate. Also, the organizers were engaged in the complication of datasets, removing and replacing simple sequences of images.

2022: The VOT challenge was characterized by a very large number of specialized sub-challenges. The emphasis was placed on different variations of short-term trackers and one long-term tracker. Localization task that used segmentation mask, and not just bounding box was developed.

This year, the organizers have dramatically changed the rules of the game, which affected the results of many participants. Only one task was proposed for the competition which, in fact, covered all the subtasks that were provided previously: it was necessary to predict long-term tracks on RGB video for arbitrarily given objects. The object was marked with a mask only on the first frame of the video.

The dataset has also been completely renovated. In the validation set, four videos and nine objects for tracking were available with full markup, and the test set contained 144 videos and a total of more than 200,000 frames.

The videos were quite diverse; they differed in the following features:

- Different resolutions, from 180 х 320 to 1920 х 1080. Of these, 94 videos had a resolution of 1280 х 720.

- Multiple objects to track on video: from 1 to 8.

- A lot of long videos. Average number of frames per video: 2073; maximum number of frames: 10,699 (that is over seven minutes).

- Some videos had a lot of very similar objects (zebras, balls, etc.) that needed to be tracked independently.

- There were many dynamic scenes with active camera movement.

- Very often, there were scenarios when an object disappeared for rather a long time and later reappeared.

- There were some videos with a very small object to track.

- Judging by the validation videos, the ground truth masks did not accurately describe the tracking object.

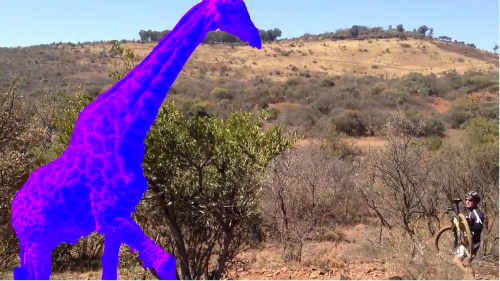

Example: first frames in four validation videos with one of the objects for tracking (the target object is marked in purple):

The organizers suggested using a specialized metric, which is very different from generally accepted ones. It is called Quality, and it is the basis for LeaderBoard calculations. There are a few more additional metrics: accuracy, robustness, etc. The organizers of the challenge described the metrics in detail in the following paper: https://data.votchallenge.net/vots2023/measures.pdf

Our Solution

We have followed this challenge from the very beginning, however we could not pay enough attention to it every year because it takes place at the "hottest" session time for university teachers. Nevertheless, we analyzed successful cases and tasks from the competition and used them to train students and conduct our local competitions.

This year we set up a team and resolved to take part in the competition in a big way. Firstly, we agreed on the following strategy: we won't use any ready solutions from previous contests; quite the opposite, we should try to apply novel tools that were developed recently.

We took the new Facebook network "Segment Anything" [Kirillov A. et al. Segment anything //arXiv preprint arXiv:2304.02643. 2023, https://github.com/facebookresearch/segment-anything] for intellectual segmentation of the initial image. After creating a partition for comparison with the original object for tracking, we used neural network Open CLIP [Radford A. et al. Learning transferable visual models from natural language supervision. International conference on machine learning. PMLR, 2021. Pp. 8748-8763, https://github.com/mlfoundations/open_clip]. This network performed well in the competition Google Universal Image Embedding [https://www.kaggle.com/competitions/google-universal-image-embedding] on the Kaggle platform without additional training. Open CLIP allows user to get Embedding for each part of the segmented image and Embedding for the desired objects. Then, after finding pairwise cosine similarity between parts of the image, one can find the object with the maximum metric and select it as part of the track.

In addition, we tested another network, Dinov2 [Oquab M. et al. Dinov2: Learning robust visual features without supervision. arXiv preprint arXiv:2304.07193. 2023, https://github.com/facebookresearch/dinov2], which should also give good Embedding for object comparison. The result is similar to OpenCLIP, but slightly worse.

Our solution is available here: https://github.com/ZFTurbo/VOTS2023-Challenge-Tracker

Results

The code provided by organizers allows users to measure metrics locally for validation videos. To check metrics of test videos, the appropriate results should be uploaded to the server.

Validation results

|

Tracker |

Quality |

Accuracy |

Robustness |

NRE |

DRE |

ADQ |

|

SegmentAnything and CLIP |

0.332 |

0.671 |

0.510 |

0.002 |

0.488 |

0.000 |

|

SegmentAnything and Dinov2 |

0.326 |

0.663 |

0.520 |

0.002 |

0.478 |

0.000 |

Test results

|

Tracker |

Quality |

Accuracy |

Robustness |

NRE |

DRE |

ADQ |

|

SegmentAnything and CLIP |

0.25 |

0.66 |

0.37 |

0.01 |

0.62 |

0.00 |

Known issues

The main idea of our tracker is that our solution is a Zero-shot predictor because we are using neural networks not trained for the tracking task. Thus, we save time and expensive computing resources for training the tracker. This is especially important in the presence of very serious restrictions on the datasets used that were announced by the organizers.

Due to the short duration of the competition, we did not have time to do everything we wanted. In the current implementation, we select the next object simply by the maximum cosine similarity metric. But with this approach, we do not solve two problems:

1. In case there are very similar objects in the image, we will jump between them.

2. In case the object is out of sight, we still choose a random object.

Results

Based on the experience of previous competitions, we proceeded from the postulate that the task is to mark the tracked object as accurately as possible. We considered that the disappearance from visibility is short-term and can be neglected. This turned out to be a serious mistake, as the new Quality metric is built in such a way that frames with no object are taken into account with high weight, and the tracker did not recognize such cases. Also, the test dataset itself contained a significant number of sequences where there was no object for tracking for a long time.

Consequently, it was enough for a simple baseline tracker built on bounding boxes (which took into account peculiarities of the metric and test dataset) to show better results than our tracker. Of course, it is frustrating that the organizers did not inform the community about the Quality threshold in advance, especially when they abruptly changed the approach to evaluation and suggested that everyone do only long-term tracking, although earlier the emphasis was on short-term. But on the other hand, it was not only our team that fell into this trap, since our result still appeared on the leaderboard, where only 40% of the best participants of the competition are placed.

Future work

To significantly improve the results of our tracker, an additional neural network based on recurrent LSTM layers is required. It will receive data from N previous frames as input:

Embedding, x, y, area are defined for the current frame for each object. The neural network predicts whether an object is a tracked element. If probability is low for all objects, we propose that the object has gone off the screen.

We can do training on available tracking datasets with known markup (segmentation). Thus, we will be able to use the most advanced ready-made ML models in pre-trained form, and we will train only this additional mesh.

Conclusion

Despite not the best result obtained this year, we are satisfied with our participation in VOT Challenge. It confirmed the reputation of an excellent computer vision competition due to interesting tasks, good documentation, and convenient tools for starting. We recommend all specialists in computer vision and academic teams bear VOT Challenge in mind.

Aleksandr Romanov ([email protected]) is an associate professor and head of the CAD Laboratory of HSE University, Moscow, Russia. Roman Solovyev ([email protected]) is a chief researcher in IPPM RAS, Moscow, Russia.

No entries found