Communications of the ACM

What If Generative AI Turned Out to be a Dud?

Originally posted on Marcus on AI

With the possible exception of LK-99, the alleged room-temperature superconductor that was quick to rise and quick to fall, few things I have ever seen have been more hyped than generative AI. Valuations for many companies are in the billions, and news coverage is literally constant; it's all anyone can talk about, from Silicon Valley to Washington DC to Geneva.

But, to begin with, the revenue isn't there yet, and it might never come. The valuations anticipate trillion-dollar markets, but actual current revenues from generative AI are rumored to be in the hundreds of millions. Those revenues genuinely could grow by 1000x, but that's mighty speculative. We shouldn't simply assume it.

Most of the revenue so far seems to derive from two sources: semi-automatic code writing (programmers love using generative tools as assistants) and text writing. I think coders will remain happy with generative AI assistance; its autocomplete nature is fabulous for their line of work, and they have the training to detect and fix the not-infrequent errors. Undergrads will also continue to use generative AI, but their pockets aren't deep (most likely they will turn to open-source competitors).

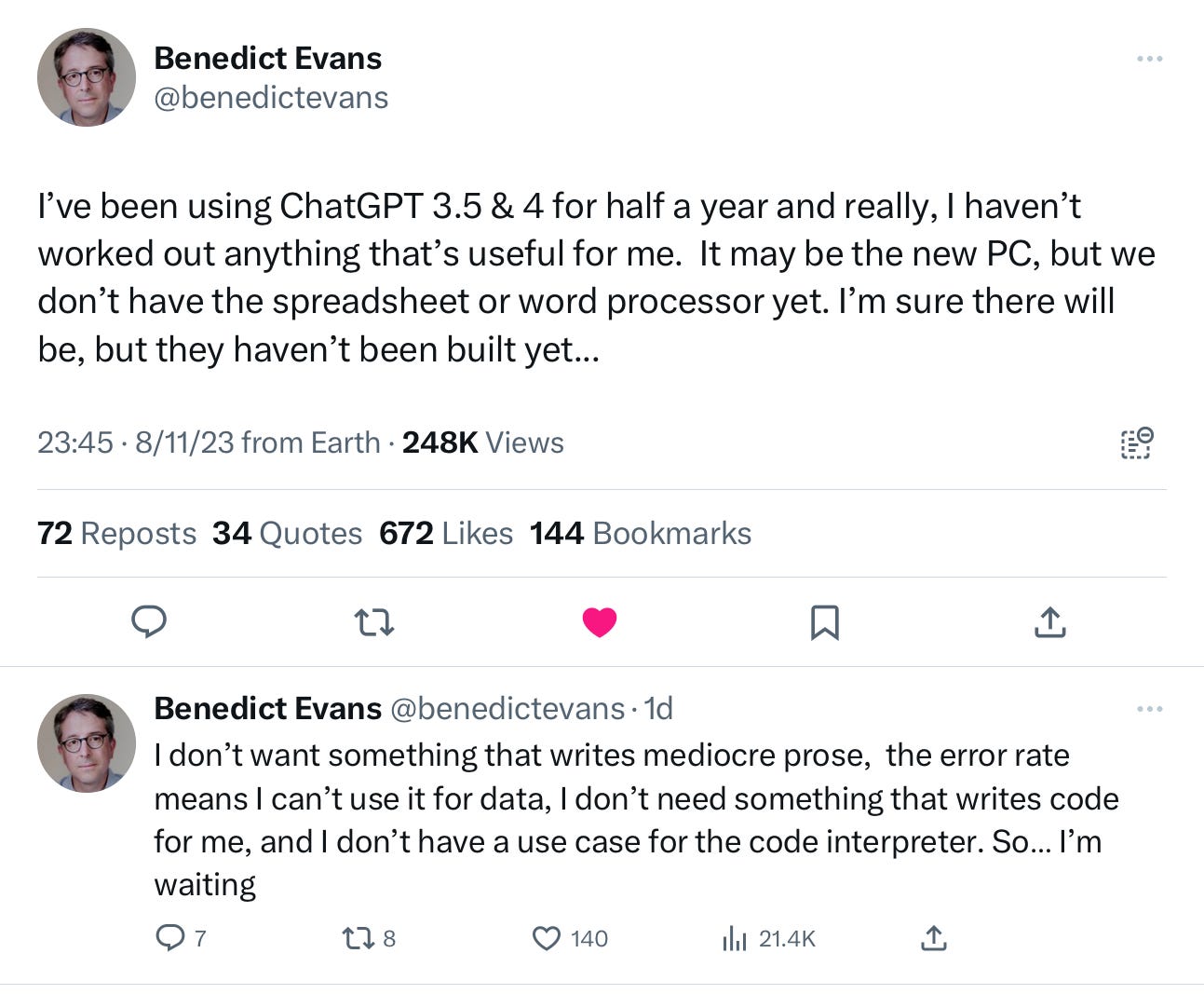

Other potential paying customers may lose heart quickly. This morning the influential venture capitalist Benedict Evans raised exactly this issue in a series of posts on X (formerly known as Twitter):

My friends who tried to use ChatGPT to answer search queries to help with academic research have faced similar disillusionment. A lawyer who used ChatGPT for legal research was excoriated by a judge, and basically had to promise, in writing, never to do so again in an unsupervised way. A few weeks ago, a news report suggested that GPT use might be falling off.

If Evans' experience is a canary in a coal mine, the whole generative AI field, at least at current valuations, could come to a fairly swift end. Coders would continue to use it, and so would marketers who have to write a lot of copy to promote their products and boost their search engine rankings. But neither coding nor high-speed, mediocre-quality copywriting is remotely enough to sustain current valuation dreams.

Even OpenAI could have a hard time living up to its $29 billion valuation; competing startups valued in the low billions might well eventually collapse if, year after year, they manage only tens or hundreds of millions in revenue. Microsoft, up by nearly half for the year, perhaps largely on the promise of generative AI, might see its stock slump; NVIDIA, which has skyrocketed even more, might also fall.

Roger McNamee, an early pioneer in venture investing in software, and I were discussing this the other day. Perhaps the only use case that really seemed compelling to either of us economically was search (e.g., using Bing powered by ChatGPT instead of Google Search) — but the technical problems there are immense; there is no reason to think that the hallucination problem will be solved soon. If it isn't, the bubble could easily burst.

§

But what has me worried right now is not just the possibility that the whole generative AI economy—still based more on promise than actual commercial use—could see a massive, gut-wrenching correction, but that we are building our entire global and national policy on the premise that generative AI will be world-changing in ways that may in hindsight turn out to have been unrealistic.

On the global front, the Biden administration has both restricted China's access to the high-end chips that are (currently) essential for generative AI and limited investment in China; China isn't exactly warm toward global cooperation either. Tensions are extremely high, and a lot of that tension seems to revolve around dreams about who might "win the AI war."

But what if the winner turns out to be nobody, at least not any time soon?

On the national front, regulation that might protect consumers (e.g., around privacy, bias reduction, data transparency, and misinformation) is being slowed by countervailing pressure to make sure that US generative AI develops as quickly as possible. We might not get the consumer protections we need because we are trying to foster something that may not grow as expected.

I am not saying anyone's particular policies are wrong. But if the premise that generative AI is going to be bigger than fire and electricity turns out to be mistaken, or at least doesn't bear out in the next decade, we could certainly wind up with what in hindsight is a lot of needless extra tension with China, possibly even a war in Taiwan, all over a mirage. We could also end up with a social-media-level fiasco in which consumers are exploited in news and misinformation rules the day because governments were afraid to clamp down hard enough. It's hard to put odds on any of this, but it's a sobering thought, and one that I hope will get some consideration in both Washington and Beijing.

§

In my mind, the fundamental error that almost everyone is making is believing that generative AI is tantamount to AGI (general-purpose artificial intelligence, as smart and resourceful as humans, if not more so).

Everybody in industry would probably like you to believe that AGI is imminent. It stokes their narrative of inevitability, and it drives their stock prices and startup valuations. Dario Amodei, CEO of Anthropic, recently projected that we will have AGI in 2-3 years. Demis Hassabis, CEO of Google DeepMind, has also made projections of near-term AGI.

I seriously doubt it. We have not one but many serious, unsolved problems at the core of generative AI, ranging from these systems' tendency to confabulate (hallucinate) false information, to their inability to reliably interface with external tools like Wolfram Alpha, to their instability from month to month (which makes them poor candidates for engineering use in larger systems).

And, reality check: we have no concrete reason, other than sheer techno-optimism, to think that solutions to any of these problems are imminent. "Scaling" systems by making them larger has helped in some ways, but not others; we still cannot guarantee that any given system will be honest, harmless, or helpful, rather than sycophantic, dishonest, toxic, or biased. And AI researchers have been working on these problems for years. It's foolish to imagine that such challenging problems will all suddenly be solved. I've been griping about hallucination errors for 22 years; people keep promising the solution is nigh, and it never happens. The technology we have now is built on autocompletion, not factuality.

For a while this concern fell on deaf ears, but some tech leaders finally seem to be getting the message. Witness this story in Fortune from just a few days ago:

If hallucinations aren't fixable, generative AI probably isn't going to make a trillion dollars a year. And if it probably isn't going to make a trillion dollars a year, it probably isn't going to have the impact people seem to be expecting. And if it isn't going to have that impact, maybe we should not be building our world around the premise that it is.

Gary Marcus, well known for his recent testimony on AI oversight at the U.S. Senate, recently co-founded the Center for the Advancement of Trustworthy AI, and in 2019 wrote a book with Ernest Davis about getting to a thriving world with AI we can trust. He still desperately hopes we can get there.
