Communications of the ACM

UIST 2010: Papers of the First Day

I began my day by getting lost in New York, because it is indeed a good city to get lost in. I followed Google Maps to a train station that did not actually exist, but fortunately I had memorized the walking route, so I decided to walk to the Judson Memorial Church, where the symposium would take place. It turned out to be a nice walk: I went past a beautiful park and down a crowded street full of people willing to help me find my way, put my umbrella to full use in the windy Manhattan rain, and was honked at by taxi drivers whose tempers matched those of their Boston counterparts. It was all a lot of fun.

The conference turned out to be marvelous, and the papers presented today were of great quality.

One presentation that drew my attention was "Pen + Touch = New Tools" by Hinckley et al. from Microsoft Research. Hinckley explained why the pen is still needed even though almost every user interface nowadays uses touch as input: when you draw a diagram, mark up a paragraph, or write down your thinking process, a pen works far better than a finger. The system Hinckley presented therefore combines touch and pen, motivated by scenarios in which both a hand and a pen (or a knife and the like) are needed.

Another short paper that interested me was “MAI Painting Brush: An Interactive Device That Realizes the Feeling of Real Painting,” presented by Otsuki from Ritsumeikan University. With a head-mounted display (HMD) and a special brush (the MAI Painting Brush), you can pick up your favorite real-world object and paint on it without worrying about ruining its surface, because whatever you paint onto it exists only in the virtual world, and only someone wearing an HMD can see it.

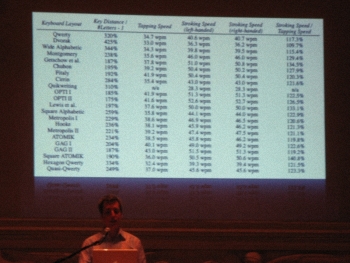

The other presentation that really impressed me was “Performance Optimizations of Virtual Keyboards for Stroke-Based Text Entry on a Touch-Based Tabletop,” by Jochen Rick, formerly of The Open University. Building on previous work on Shape Writing (a technique that lets users enter each word with a single stroke) and on empirical data from existing keyboard layouts, Rick created a new model of stroking and used his algorithm to evaluate existing keyboard layouts. The picture above gives a sense of the astounding amount of data Rick collected.

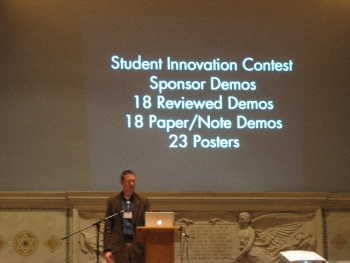

Today’s symposium ended with a demo and poster session, which Jessica will cover in an upcoming post.

I’d like to conclude this post with a quote of the day from one of the presentations:

“I have an iPad, but sorry Steve Jobs, it’s not good for typing.”

About the author:

Langxuan "James" Yin is a Ph.D. candidate at the College of Computer and Information Science at Northeastern University. James' research focuses on creating conversational virtual characters, tailored to cultural minorities, that improve the target population's health and promote healthy behaviors. He is also an active webcomic artist.
