Communications of the ACM

Anomaly Detectors Catch Zero-Day Hackers

Anomaly detectors can now alert users to zero-day computer incursions the first time those incursions are attempted.

Credit: Shutterstock

Anomaly detectors are not intended to replace traditional pattern-based (or "signature-based") malware detectors, but they can do one thing that malware scanners cannot: they can detect zero-day computer incursions, which have never been used before, the very first time they occur.

Anomaly detectors work faster than signature scanners, because signature scanners work only after an intrusion method has been identified, which takes at least a day. Anomalies, on the other hand, are spotted before the malware has even been identified, so data access can be cut off immediately. Only after the data has been made inaccessible do security analysts go in to identify the intrusion signature and patch the security hole. They then add the malware's signature to the database that traditional pattern scanners already use. As a result, anomaly detectors can block hackers' access to data at the first sign of an intrusion. Without them, intruders may go undetected for days, weeks, or even months, by which time massive amounts of otherwise-secure data may already have been stolen.

The problem is that computers are general-purpose devices. As a result, many anomaly intrusion detectors are plagued by false positives, raised simply because a program is run in a slightly different way than usual. For instance, some anomaly detectors monitor the power the system draws and flag power surges that indicate massive data transfers. To avert false positives, the rules (called heuristics) have to be carefully crafted. They must detect every type of misuse without misidentifying legitimate activity as an intrusion, such as a valid but unusually long data transfer made by a program.
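The sketch below shows one minimal form such a heuristic could take. It assumes the detector receives power readings in watts and has a short baseline recorded during normal operation. The function names, the sample readings, and the "mean plus `k` standard deviations" rule are illustrative assumptions, not taken from any particular product. Raising `k` suppresses false positives from legitimate heavy transfers, at the cost of missing smaller surges.

```python
import statistics
from typing import List

def learn_threshold(baseline: List[float], k: float = 3.0) -> float:
    """Hypothetical rule: anything above mean + k * (population) std. dev. is a surge."""
    return statistics.mean(baseline) + k * statistics.pstdev(baseline)

def flag_surges(readings: List[float], threshold: float) -> List[int]:
    """Return the indices of readings (in watts) that exceed the threshold."""
    return [i for i, watts in enumerate(readings) if watts > threshold]

baseline = [100.0, 102.0, 98.0, 101.0, 99.0]   # made-up readings from normal operation
readings = [100.0, 103.0, 150.0, 99.0]         # made-up live readings

print(flag_surges(readings, learn_threshold(baseline, k=3.0)))   # [2]
print(flag_surges(readings, learn_threshold(baseline, k=40.0)))  # []
```

With `k=3` the 150 W reading is flagged. With a very loose `k=40` nothing is, which illustrates how tuning the heuristic trades missed intrusions against false alarms.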

"Anomaly-based detection has the potential to detect novel or zero-day attacks," said computer security expert Somesh Jha, a professor in the computer sciences department of the University of Wisconsin (Madison, WI) who has reviewed, but has not been involved in developing, the analogy detectors described in this article. "Note that signature/pattern based techniques can only detect exploits that correspond to a pattern in their database. The challenge in anomaly-based detection is obviously to balance false positives, which has been a huge challenge."

Two basic approaches have been relatively successful. The first uses neural networks, modeled on the brain, that learn to recognize a program's proper use by monitoring all of the program's normal activities. After this learning phase, the neural network can immediately detect unusual behavior in the program.
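Training a neural network is beyond a short example, so the sketch below swaps in a much simpler learned model that has the same two phases. During learning, it records every length-`n` window of system calls seen in normal runs. During detection, it reports any window it never saw. The call names, traces, and window size are hypothetical.

```python
from typing import Iterator, List, Set, Tuple

Window = Tuple[str, ...]

def windows(trace: List[str], n: int) -> Iterator[Window]:
    """Yield every contiguous length-n window of a call trace."""
    for i in range(len(trace) - n + 1):
        yield tuple(trace[i:i + n])

def learn(normal_traces: List[List[str]], n: int = 3) -> Set[Window]:
    """Learning phase: remember every window observed in normal runs."""
    model: Set[Window] = set()
    for trace in normal_traces:
        model.update(windows(trace, n))
    return model

def unusual_windows(trace: List[str], model: Set[Window], n: int = 3) -> List[Window]:
    """Detection phase: report windows never seen while learning."""
    return [w for w in windows(trace, n) if w not in model]

model = learn([
    ["open", "read", "write", "close"],
    ["open", "read", "read", "close"],
])
print(unusual_windows(["open", "read", "write", "close"], model))  # []
print(unusual_windows(["open", "read", "exec", "close"], model))
# [('open', 'read', 'exec'), ('read', 'exec', 'close')]
```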

The second approach is to manually construct a mathematical model that strictly defines normal usage of the computer system and flags any deviation from the model as a possible intrusion.
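For contrast with a learned model, here is a minimal sketch of a hand-built one. It assumes normal usage can be written down as a table of which call may follow which. The table `ALLOWED`, the pseudo-calls `START` and `END`, and the call names are all hypothetical.

```python
from typing import Dict, List, Set, Tuple

# Hand-written model: for each call, the calls permitted to come next.
# START and END are pseudo-calls marking the beginning and end of a run.
ALLOWED: Dict[str, Set[str]] = {
    "START": {"open"},
    "open": {"read", "write"},
    "read": {"read", "write", "close"},
    "write": {"write", "close"},
    "close": {"END"},
}

def violations(trace: List[str]) -> List[Tuple[str, str]]:
    """Return every (call, next_call) step the model does not permit."""
    path = ["START"] + trace + ["END"]
    return [(a, b) for a, b in zip(path, path[1:]) if b not in ALLOWED.get(a, set())]

print(violations(["open", "read", "write", "close"]))  # []
print(violations(["open", "exec", "close"]))  # [('open', 'exec'), ('exec', 'close')]
```

Because the model is strict, any legitimate behavior its author forgot to list is flagged too. This is one way hand-built models produce false positives.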

The problem with both types of anomaly intrusion detector is that a clever attacker who knows such detectors are in use could craft an intrusion program that never deviates too far from normal procedures.
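This evasion problem is easy to see with a detector that only knows which adjacent call pairs are normal. In the hypothetical sketch below, an attacker repeats a normal read-then-send step thousands of times to exfiltrate data. Every adjacent pair in the attack already appeared in training, so the pair-based model reports nothing. The call names and the runs are made up.

```python
from typing import List, Set, Tuple

Pair = Tuple[str, str]

def pairs(trace: List[str]) -> Set[Pair]:
    """Every adjacent (call, next_call) pair in a trace."""
    return set(zip(trace, trace[1:]))

# Learning phase on one hypothetical normal run: read a record and send it, twice.
normal_run = ["open", "read", "send", "read", "send", "close"]
model = pairs(normal_run)

# Hypothetical evasive attack: the same steps repeated 10,000 times to
# exfiltrate far more data, without ever using a pair the model has not seen.
attack_run = ["open"] + ["read", "send"] * 10_000 + ["close"]

unseen = pairs(attack_run) - model
print(len(attack_run), "calls,", len(unseen), "unseen pairs")  # 20002 calls, 0 unseen pairs
```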

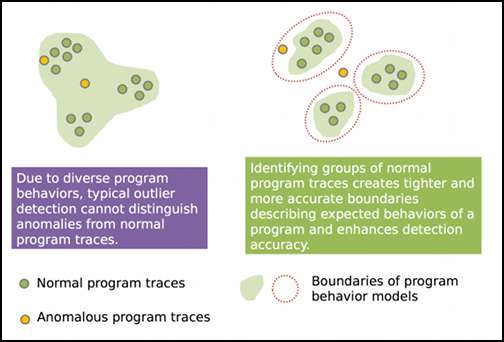

Due to the diverse behaviors of the typical program, outlier detection cannot isolate attacks (left), but by clustering behaviors, attacks can be identified more easily (right).

Danfeng (Daphne) Yao and collaborator Naren Ramakrishnan, both professors at Virginia Polytechnic Institute and State University (Virginia Tech) in Blacksburg, VA, think they have devised a technique by which any program can be protected from even the slyest hacker "by observing a program's execution traces and/or analyzing executables." Yao explained, "In our work entitled 'Unearthing Stealthy Program Attacks Buried in Extremely Long Execution Paths,' presented at the ACM Conference on Computer and Communications Security [CCS 2015, held in October in Denver], we constructed such a behavioral model through data mining and learning methods on function- and system-call traces."

According to Yao and Ramakrishnan, the biggest advantage of their technique is that it detects new types of attacks before they succeed, rather than watching for the signatures of previously successful attacks, as standard anti-virus software does.

"The capability of detection is not limited to recognizing known attacks. For hackers to accomplish their attack goals, they inevitably need to alter aspects of the program execution. One needs to compare the observed runtime execution traces with the behavioral models inferred about the program," said Yao.

If you measure success by accuracy (how well the technique identifies attacks, as measured by the number of false negatives and false positives) and by efficiency, anomaly detection comes out on top. Even so, the researchers do not advise decommissioning existing "virus scans," since those still produce fewer false positives and false negatives for known threats. (False negatives are the least desirable, of course, because they mean hackers got in and probably achieved their goals. False positives, however, consume manpower, as analysts must inspect each flagged action manually to confirm that it is valid.)
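For readers who want the two error measures pinned down, here is a minimal sketch. It assumes a labeled evaluation set in which each run is marked as attack or benign, and records whether the detector flagged it. The function name and the data are hypothetical.

```python
from typing import List, Tuple

def error_rates(is_attack: List[bool], flagged: List[bool]) -> Tuple[float, float]:
    """Return (false-positive rate, false-negative rate).

    A false positive is a benign run the detector flagged; a false
    negative is an attack it let through. Assumes both classes are present.
    """
    pairs = list(zip(is_attack, flagged))
    false_pos = sum(1 for attack, flag in pairs if flag and not attack)
    false_neg = sum(1 for attack, flag in pairs if attack and not flag)
    benign = sum(1 for attack in is_attack if not attack)
    attacks = len(is_attack) - benign
    return false_pos / benign, false_neg / attacks

# Hypothetical evaluation: four benign runs, two attacks.
labels = [False, False, False, False, True, True]
verdict = [False, True, False, False, True, False]
print(error_rates(labels, verdict))  # (0.25, 0.5)
```

Here one benign run in four costs an analyst's time, and one attack in two slips through unnoticed.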

Yao said program anomaly detection offers "flexibility in enforcing dynamic program behaviors, thus providing necessary complementary security protection against zero-day [first time] exploits, as well as advanced persistent threats [such as hackers installing monitoring software that hides inside the victim's computer and reports back to the hacker periodically]. Like all other security solutions, program anomaly detection is not perfect. The success of the detection is with respect to an attack model, which describes the adversary's capabilities."

History

Dorothy Denning wrote the seminal paper on using anomaly detection to detect computer intrusions, "An Intrusion Detection Model," in 1987. Since then, dozens of research teams have suggested ways to implement her ideas, but most have focused on unsophisticated attacks that alter the control flow and/or data flow of the program under attack. The technique developed by Yao, Ramakrishnan, and doctoral candidate Xiaokui Shu, on the other hand, assumes the attack comes from a sophisticated hacker, or team of hackers. Such an attack does not disrupt the program's control flow or data flow, make unknown calls, or even alter call arguments.

"One may wonder what hackers may accomplish under this restricted attack model. Surprisingly, many real-world attacks belong to this category, including service abuse attacks, denial-of-service attacks, memory exploits such as heap feng shui, and workflow violations that, for example, bypass access control," Yao said.

The method developed by Yao and colleagues works by characterizing the numerical and semantic properties of call events, including the correlation patterns within long call sequences, the intervals between calls, and the call frequencies. Getting there was difficult, they said, because modern programs exhibit a diverse set of "normal" behaviors, making it hard to recognize all of them without letting false negatives through.
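Correlation and frequency properties of this kind can be stored in Boolean and integer matrices, as the researchers describe. The sketch below is one hedged reading of that idea. It assumes "correlation" means which call directly follows which. An integer matrix counts each ordered call pair in a trace, and a Boolean matrix records whether the pair occurs at all. The pairing rule, the function name, and the call vocabulary are assumptions, and the researchers' actual definitions may differ.

```python
from typing import List, Tuple

def call_matrices(trace: List[str], calls: List[str]) -> Tuple[List[List[bool]], List[List[int]]]:
    """Build (occurs, freq) matrices indexed by each call's position in `calls`.

    freq[i][j]   counts how often calls[j] directly follows calls[i]
    occurs[i][j] is True if that ordered pair appears at least once
    """
    index = {call: k for k, call in enumerate(calls)}
    size = len(calls)
    freq = [[0] * size for _ in range(size)]
    for current, following in zip(trace, trace[1:]):
        freq[index[current]][index[following]] += 1
    occurs = [[count > 0 for count in row] for row in freq]
    return occurs, freq

occurs, freq = call_matrices(["open", "read", "read", "read", "close"],
                             ["open", "read", "close"])
print(freq)    # [[0, 1, 0], [0, 2, 1], [0, 0, 0]]
print(occurs)  # [[False, True, False], [False, True, True], [False, False, False]]
```

A new trace's matrices could then be compared cell by cell with those learned from normal runs. A pair never seen in training, or a count far outside the trained range, would be a candidate anomaly.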

"Straightforward application of novelty detection or outlier detection techniques such as 1-class SVM [support vector machines] results in unacceptably high false positives. For some programs, we found that normal call sequences are more different from each other than from anomalous traces! In addition, long traces may consist of tens of thousands of system calls."

To represent all these diverse cases efficiently during both the learning and detection phases, the researchers grouped behaviors into clusters, each representing a different type of normal execution pattern. Their detector could then quickly recognize which cluster a new behavior belongs to, even though the clusters as a whole covered many very different kinds of normal execution.
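Here is a minimal sketch of the cluster-then-check idea. It assumes each run has already been summarized as a numeric profile vector. Plain k-means and a "farthest training point" radius are stand-ins chosen for brevity, not the researchers' actual clustering or per-cluster detector, and all names and data are hypothetical. The usage reproduces the situation in the figure: a profile lying between two families of normal behavior looks ordinary against a single global boundary, but falls outside every individual cluster.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, ...]

def dist(a: Sequence[float], b: Sequence[float]) -> float:
    """Euclidean distance between two behavior profiles."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def centroid(points: List[Point]) -> Point:
    """Component-wise mean of a non-empty list of points."""
    return tuple(sum(coords) / len(points) for coords in zip(*points))

def kmeans(points: List[Point], k: int, rounds: int = 20) -> List[Point]:
    """Plain k-means with deterministic farthest-point initialization."""
    centers = [points[0]]
    while len(centers) < k:
        centers.append(max(points, key=lambda p: min(dist(p, c) for c in centers)))
    for _ in range(rounds):
        groups: List[List[Point]] = [[] for _ in centers]
        for p in points:
            groups[min(range(k), key=lambda i: dist(p, centers[i]))].append(p)
        # Keep the previous center if a cluster ends up empty.
        centers = [centroid(g) if g else c for g, c in zip(groups, centers)]
    return centers

class ClusteredDetector:
    """Stage 1: find the nearest cluster. Stage 2: test that cluster's boundary."""

    def __init__(self, normal: List[Point], k: int) -> None:
        self.centers = kmeans(normal, k)
        # A cluster's boundary is the distance to its farthest training point.
        self.radius = [0.0] * k
        for p in normal:
            i = self._nearest(p)
            self.radius[i] = max(self.radius[i], dist(p, self.centers[i]))

    def _nearest(self, p: Point) -> int:
        return min(range(len(self.centers)), key=lambda i: dist(p, self.centers[i]))

    def is_anomalous(self, p: Point) -> bool:
        i = self._nearest(p)
        return dist(p, self.centers[i]) > self.radius[i]

# Two made-up families of normal behavior, summarized as 2-D profiles.
normal = [(0.0, 0.0), (0.0, 1.0), (1.0, 0.0), (1.0, 1.0),
          (10.0, 10.0), (10.0, 11.0), (11.0, 10.0), (11.0, 11.0)]
suspect = (5.0, 5.0)  # sits between the two families

print(ClusteredDetector(normal, k=1).is_anomalous(suspect))  # False: one global boundary
print(ClusteredDetector(normal, k=2).is_anomalous(suspect))  # True: outside both clusters
```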

"Anomaly detection is performed within the boundary of the cluster. We found that this two-stage detection technique brings orders of magnitude reduction in false positive rates. In addition, we designed Boolean-matrix- and integer-matrix-based data structures to compactly represent call correlation and frequency properties," Yao said. "Our experimental evaluation showed that our prototype is effective against three types of real-world attacks and four categories of synthetic anomalies with less than 0.01% false positive rates and 0.1-to-1.3 millisecond analysis overhead per behavior instance" of 1,000 to 50,000 function calls or system calls.

Next, Yao plans to collaborate with electrical and computer engineers to secure Internet of Things devices, smart cities, and other smart infrastructure with lightweight, tamper-resistant program anomaly detection systems.

Ramakrishnan wants to develop data analytics approaches to "not just detect cyber-attacks, but also to forecast them." He believes there are many signals "in the wild" that precede attacks and could be used to forecast them.

R. Colin Johnson is a Kyoto Prize Fellow who has worked as a technology journalist for two decades.
