Communications of the ACM

Smart Phone Tricks

Researchers are unveiling new ways to interact with smartphone touch screens.

Have you ever wished you had more fingers to operate some of your favorite smartphone apps? Or found that your digits lack the dexterity to reach some of the app buttons, especially when using your device one-handed? Maybe you're just sick of getting lost in nested on-screen menus, or perhaps you feel you use your phone far too much and need a way out?

Well, it is springtime, and that means it is the season for the annual technology conferences on intelligent user interfaces, mobile computing, and human-computer interaction. After plowing through many of the papers researchers have presented, or plan to present soon, I have identified an array of ideas that address those frustrations and could come to smartphones, tablets, or wearables in the not-too-distant future.

Perhaps the most ambitious idea of the bunch was presented at the ACM Intelligent User Interfaces conference (IUI2019) in Marina del Rey, CA, in mid-March. Niels Henze of the University of Regensburg and his colleagues Huy Viet Le and Sven Mayer of the University of Stuttgart have investigated using a deep-learning convolutional neural network (CNN) to identify which finger or thumb a user is pressing against a touchscreen.

The problem the trio is addressing is that phones treat every finger and thumb as the same digit. If individual digits were recognizable, however, an index-finger touch could trigger one function, a pinkie tap another, and a thumb press yet another, giving app buttons secondary and even tertiary functions, much like right-clicking on a PC desktop to open a contextual menu.

Yet because capacitive touchscreens capture only low-resolution "images" of the touching digit, the team found their CNN was most accurate at distinguishing the left and right thumbs from each other and from the user's index finger, which is good enough for some multi-digit tasks. "Differentiating between two thumbs can be used in a similar way to two buttons on a hardware mouse," say the researchers, suggesting this would be particularly useful for navigating three-dimensional (3D) applications, with one thumb rotating the view and the other moving into or out of a space. The researchers are now releasing their training dataset of nearly 500,000 capacitive images so other groups can attempt to build what they call "thumb-aware" apps that people can use faster.
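To make the two-button analogy concrete, here is a minimal sketch, assuming an app already receives a digit label for each touch from some finger-identification classifier such as the researchers' CNN (which is not implemented here). The `Digit` labels, the `Camera` fields, and the gain values are hypothetical illustrations, not part of the researchers' work.

```python
from dataclasses import dataclass
from enum import Enum


class Digit(Enum):
    """Hypothetical labels a finger-identification model might emit."""
    LEFT_THUMB = "left_thumb"
    RIGHT_THUMB = "right_thumb"
    INDEX = "index"


@dataclass
class Camera:
    """Minimal state for a 3D viewer: heading and distance from the scene."""
    yaw_deg: float = 0.0
    distance: float = 10.0


def handle_drag(camera: Camera, digit: Digit, dx: float, dy: float) -> Camera:
    """Route one drag gesture to a 3D action based on which digit made it.

    `dx` and `dy` are drag offsets in screen pixels; the gains are arbitrary.
    """
    if digit is Digit.LEFT_THUMB:
        # Like one mouse button: horizontal drags rotate the view.
        camera.yaw_deg = (camera.yaw_deg + 0.5 * dx) % 360
    elif digit is Digit.RIGHT_THUMB:
        # Like the other button: vertical drags move into or out of the scene,
        # never closer than one unit.
        camera.distance = max(1.0, camera.distance + 0.25 * dy)
    # Index-finger drags fall through to the app's ordinary behavior.
    return camera


cam = Camera()
handle_drag(cam, Digit.LEFT_THUMB, dx=40, dy=0)    # yaw_deg becomes 20.0
handle_drag(cam, Digit.RIGHT_THUMB, dx=0, dy=-20)  # distance becomes 5.0
```

The same drag produces different results depending only on which digit the classifier reports, which is the extra input channel the researchers are after.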

Why swipe when you can pinch?

Yet another way to speed smartphone operation will be revealed at the ACM Conference on Human Factors in Computing Systems (CHI2019) in Glasgow, U.K., in early May. Called PinchList, this user-interface trick offers an ingenious way to navigate hierarchical mobile lists without endless, fiddly sideways moves through multiple nested menus.

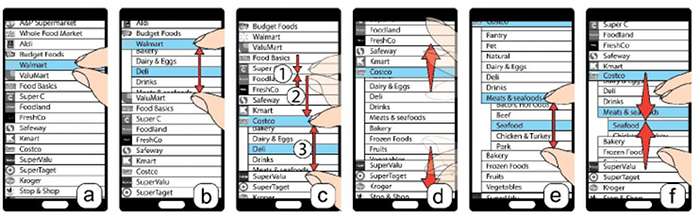

Steps of PinchList: (a) two pinched-in fingers start browsing a list; (b) pinching out the fingers reveals a sub-list; (c) move the fingers to explore another sub-list; (d) flick a finger to move the current view to the top and bottom edges of the screen; (e) start to explore the next two layers; (f) flick with multiple fingers to navigate back to the previous layer.

Credit: PinchList: Leveraging Pinch Gestures for Hierarchical List Navigation on Smartphones, CHI2019, https://xiangminf.github.io/paper/pinchlist-chi19.pdf

Developed by researchers from one Chinese and four Canadian universities, and led by Teng Han at Canada's University of Manitoba, PinchList departs from conventional drill-down menus. Instead of a chosen list entry sending you sideways through an endless succession of new views, you simply pinch the entry open (see diagram above) to reveal a new, scrollable sub-list beneath it. To select something in there, you scroll to it and pinch it open, too, and so on, so you always stay in the midst of your list and know how to get back to it.
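The core idea, expanding an entry in place rather than replacing the whole view, can be sketched as a tree whose "pinched open" entries show their children directly beneath them. This is a minimal illustration assuming unique entry labels; the `Node` type and `visible_rows` function are hypothetical, and gesture recognition is left out entirely.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    label: str
    children: list["Node"] = field(default_factory=list)


def visible_rows(nodes: list[Node], expanded: set[str], depth: int = 0) -> list[str]:
    """Flatten the hierarchy into the rows shown on screen.

    An entry whose label is in `expanded` (i.e. has been pinched open) shows its
    sub-list directly beneath it, indented one level, so the parent list stays
    in view instead of being replaced by a new screen.
    """
    rows: list[str] = []
    for node in nodes:
        rows.append("  " * depth + node.label)
        if node.label in expanded:
            rows.extend(visible_rows(node.children, expanded, depth + 1))
    return rows


settings = [
    Node("Network", [Node("Wi-Fi"), Node("Bluetooth")]),
    Node("Display", [Node("Brightness")]),
]
expanded = {"Network"}  # the user has pinched open "Network"
print(visible_rows(settings, expanded))
# ['Network', '  Wi-Fi', '  Bluetooth', 'Display']
```

Pinching an entry closed would simply remove its label from `expanded`; the surrounding entries never leave the screen, which is what keeps the user oriented.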

Han's team tested this method of list browsing against others and found it generally faster, though they admit there are problems to iron out, such as how to pinch open a menu item at the bottom of the screen. They think the answer is to make app code let the list scroll up further, even past the last entry.

Feel the Force, Ray

Getting at hard-to-reach on-screen targets is also on CHI2019's agenda, courtesy of Christian Corsten and colleagues at RWTH Aachen University in Germany. Because phone screens have grown beyond five inches, the researchers note, it is often tough to reach buttons at the top of the screen when using the phone one-handed, as when strap-hanging on a commute or holding an umbrella. Stretching for a hard-to-reach button can loosen your grip and make you drop the device.

In response, the researchers have developed a technique they call ForceRay that extends our single-handed reach capability.

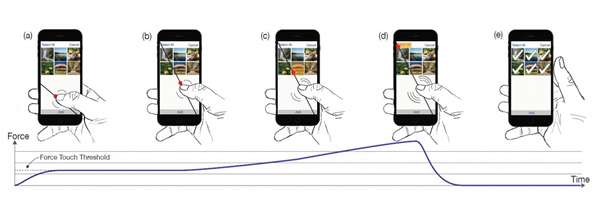

How the ForceRay interaction technique works.

Credit: Christian Corsten et al., RWTH Aachen University

To reach a distant button with ForceRay, you first press down on an inactive area of the screen, which generates a steerable line the developers call a "ray." If there is just one target button, you guide the ray over it with your thumb and let go, and it is activated. If the ray sweeps over multiple targets, however, pressing harder pushes a red cursor along the ray, and you let go when the cursor reaches the button you want. You can see how it works in this video.
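The selection logic can be sketched in a few lines of geometry. This is a minimal illustration, not Corsten and colleagues' implementation: it assumes circular targets, screen coordinates with y growing downward, a force reading already normalized to 0..1, and a simple linear mapping from force to cursor position between the nearest and farthest targets on the ray. `Target`, `targets_on_ray`, and `select_on_release` are hypothetical names.

```python
import math
from dataclasses import dataclass


@dataclass
class Target:
    name: str
    x: float
    y: float
    radius: float


def targets_on_ray(ox: float, oy: float, angle: float, targets: list[Target]):
    """Return (distance along ray, target) pairs for targets the ray crosses, nearest first."""
    dx, dy = math.cos(angle), math.sin(angle)  # unit direction of the ray
    hits = []
    for t in targets:
        vx, vy = t.x - ox, t.y - oy
        along = vx * dx + vy * dy       # projection of the target centre onto the ray
        perp = abs(vx * dy - vy * dx)   # perpendicular distance from the ray
        if along > 0 and perp <= t.radius:
            hits.append((along, t))
    hits.sort(key=lambda h: h[0])
    return hits


def select_on_release(ox, oy, angle, force, targets):
    """Pick the target to activate when the thumb lifts (force assumed in 0..1)."""
    hits = targets_on_ray(ox, oy, angle, targets)
    if not hits:
        return None
    if len(hits) == 1:
        return hits[0][1].name          # single target: force is irrelevant
    # Several targets: harder presses push the cursor farther along the ray.
    f = min(max(force, 0.0), 1.0)
    near, far = hits[0][0], hits[-1][0]
    cursor = near + f * (far - near)
    return min(hits, key=lambda h: abs(h[0] - cursor))[1].name


buttons = [Target("A", 100, 400, 30), Target("B", 100, 100, 30), Target("C", 300, 100, 30)]
up = -math.pi / 2                                 # straight up the screen
select_on_release(100, 600, up, 0.1, buttons)     # 'A': light press
select_on_release(100, 600, up, 0.9, buttons)     # 'B': firm press
```

Snapping to the hit target nearest the cursor is a simplification chosen here for brevity; a real design might instead require the cursor to lie inside a target before release.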

ForceRay-style methods could take advantage of a new cost-saving measure, too. ForceRay uses a smartphone's built-in force sensor to register how far along the ray the cursor should go, but a new technique called BaroTouch, described in the Journal of Information Processing (and previewed at CHI 2017), can do away with the need for a force sensor.

Instead, it simply harnesses the barometric sensor now found in most smartphones. That sensor normally measures air pressure, and hence your height above sea level, so that step-counting apps can work out how many stairs you have climbed, say. In an airtight, waterproof phone, however, pressing on the screen slightly raises the air pressure inside the case, so the barometer can also discriminate between two and six levels of touch force, giving apps like ForceRay even more levels of functionality. This idea could become a cheap way to compete with the three levels of pressure (light, medium, and firm presses) registered by the dedicated pressure sensors behind Apple's 3D Touch, which the iPhone maker pairs with haptic feedback from its Taptic ("tap" plus "haptic") Engine.
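The quantization step can be sketched as follows. This is a hedged illustration under stated assumptions, not BaroTouch's published method: it treats the pressure rise inside a sealed phone as a proxy for touch force, keeps a slowly updated baseline so ambient changes such as weather or climbing stairs are not mistaken for presses, assumes the first reading is taken with the screen untouched, and uses made-up hectopascal thresholds. `BarometricForceEstimator` and its parameters are hypothetical.

```python
from bisect import bisect_right


class BarometricForceEstimator:
    """Map barometer readings (hPa) to discrete touch-force levels."""

    def __init__(self, thresholds_hpa: list[float], alpha: float = 0.05):
        self.thresholds = sorted(thresholds_hpa)  # pressure rise needed for each level
        self.alpha = alpha                        # baseline smoothing factor
        self.baseline: float | None = None

    def update(self, pressure_hpa: float, touching: bool) -> int:
        """Return 0 for no press, up to len(thresholds) for the firmest press."""
        if self.baseline is None:
            self.baseline = pressure_hpa          # assumes first sample is untouched
        if not touching:
            # Track slow ambient drift only while nothing presses on the screen.
            self.baseline += self.alpha * (pressure_hpa - self.baseline)
            return 0
        # Count how many thresholds the pressure rise meets or exceeds.
        return bisect_right(self.thresholds, pressure_hpa - self.baseline)


est = BarometricForceEstimator([0.05, 0.15, 0.30])  # hypothetical thresholds
est.update(1013.25, touching=False)                 # 0, sets the baseline
est.update(1013.35, touching=True)                  # 1: light press
est.update(1013.75, touching=True)                  # 3: firm press
```

Adding or removing thresholds changes how many force levels an app can offer; in practice they would have to be calibrated per device, since case volume and sealing differ.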

The nonuser interface

If all this is making you a bit weary of smartphones, take heart: the Korea Advanced Institute of Science and Technology (KAIST) in Daejeon, South Korea, has your back. In May at CHI2019, a team led by KAIST's Jaejung Kim will present detailed results on ways to stop us from casually reaching for our phones for instant social-media gratification.

Kim's team tested a range of lockout tasks, including a compulsory pause of a few minutes between launching an app and its becoming active. By the end of a three-week test with 40 volunteers, the delay was discouraging 13% of them from using certain apps. The team then tried 10-digit and 30-digit lockout codes, which put off 27% and 47% of would-be users, respectively. The KAIST researchers believe they have demonstrated the effectiveness of their off-putting tricks, giving designers of overly compelling apps a scale of interventions they can adopt.

Without even a hint of irony, considering they are presenting at the pro-technology CHI 2019 conference, the researchers conclude their results provide "a new perspective toward smartphone non-use."
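A lockout of the kind the KAIST team tested can be sketched as a check that runs before an app opens: the user must wait out a delay, retype a randomly generated code, or both. This is a minimal illustration, not the researchers' implementation; `LockoutGate`, its parameters, the two-minute delay, and the injectable clock (added so the delay can be tested without sleeping) are all hypothetical.

```python
import secrets
import string
import time
from typing import Callable


class LockoutGate:
    """Friction placed between tapping an app icon and the app becoming usable."""

    def __init__(self, code_length: int = 0, delay_s: float = 0.0,
                 clock: Callable[[], float] = time.monotonic):
        self.delay_s = delay_s
        self.clock = clock
        self.requested_at = clock()  # when the user tried to open the app
        # Random digits the user must retype (e.g. 10 or 30 of them); empty if 0.
        self.code = "".join(secrets.choice(string.digits) for _ in range(code_length))

    def may_open(self, typed: str = "") -> bool:
        """True once the delay has elapsed and the typed code matches."""
        waited = self.clock() - self.requested_at >= self.delay_s
        return waited and typed == self.code


now = [0.0]
pause = LockoutGate(delay_s=120, clock=lambda: now[0])  # two-minute pause
pause.may_open()        # False: still waiting
now[0] = 120.0
pause.may_open()        # True: delay has elapsed

codes = LockoutGate(code_length=10)
codes.may_open("123")   # False until all 10 digits are retyped
```

Because the delay and the code length are independent parameters, a designer could dial the friction up or down, which mirrors the "scale of interventions" the researchers describe.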

Paul Marks is a technology journalist, writer, and editor based in London, U.K.
